Abstract

Electron and photon triggers covering transverse energies from 5 \(\text {GeV }\) to several \(\text {TeV }\) are essential for the ATLAS experiment to record signals for a wide variety of physics: from Standard Model processes to searches for new phenomena in both proton–proton and heavy-ion collisions. To cope with a fourfold increase of peak LHC luminosity from 2015 to 2018 (Run 2), to \(2.1 \times 10^{34}\,\hbox {cm}^{-2}\hbox { s}^{-1}\), and a similar increase in the number of interactions per beam-crossing to about 60, trigger algorithms and selections were optimised to control the rates while retaining a high efficiency for physics analyses. For proton–proton collisions, the single-electron trigger efficiency relative to a single-electron offline selection is at least 75% for an offline electron of 31 \(\text {GeV }\), and rises to 96% at 60 \(\text {GeV }\); the trigger efficiency of a 25 \(\text {GeV }\) leg of the primary diphoton trigger relative to a tight offline photon selection is more than 96% for an offline photon of 30 \(\text {GeV }\). For heavy-ion collisions, the primary electron and photon trigger efficiencies relative to the corresponding standard offline selections are at least 84% and 95%, respectively, at 5 \(\text {GeV }\) above the corresponding trigger threshold.


1 Introduction

Electrons and photons are present in many Standard Model processes as well as in searches for phenomena beyond the Standard Model. The ATLAS physics programme relies on an efficient trigger system to record a highly signal-rich subset of all collision events produced by the Large Hadron Collider (LHC) at CERN.

The ATLAS Collaboration has published several electron and photon trigger performance results since the start of data-taking: the early 2010 data are covered in Ref. [1], the 2011 data in Ref. [2] and the 2015 data in Ref. [3]. This paper addresses the evolution of performance of the electron and photon triggers from 2015 to 2018 (Run 2). The major challenge for the trigger in this period was the need to maintain excellent performance for the ATLAS physics programme while adapting to a nearly fourfold increase in the LHC peak luminosity and in the number of interactions per beam-crossing.

This paper is organised as follows. The ATLAS detector is described in Sect. 2. The trigger system is introduced in Sect. 3. Section 4 introduces the data sets used in this publication. The following two sections detail electron and photon reconstruction and identification at the analysis level (offline) and the trigger level (online). Section 7 presents the techniques used to measure the trigger performance. The performance of the photon and electron triggers from 2015 to 2018 is described in Sects. 8–11. The data quality monitoring is described in Sect. 12, and the conclusions are presented in Sect. 13.

2 The ATLAS detector

The ATLAS detector [4,5,6] is a multipurpose detector designed to observe particles produced in high-energy proton–proton (pp) and heavy-ion (HI) collisions. It is composed of a tracking detector in the innermost region around the interaction point, surrounded by calorimeters and muon chambers.

The inner tracking detector (ID) is immersed in a 2 T magnetic field produced by a thin superconducting solenoid, and provides precise reconstruction of charged-particle tracks in the pseudorapidity range \(|\eta |<2.5\). The innermost part consists of a silicon pixel detector with four layers. The layer closest to the beam-pipe, the insertable B-layer, was installed before Run 2 and provides high-resolution three-dimensional hits with pixels at a radius of 3.3 cm to improve the tracking performance. A silicon microstrip tracker surrounds the pixel detector with typically four layers of sensor modules. Each module is composed of multiple pairs of sensors with a stereo angle between them to measure three-dimensional hit positions. The outermost region of the tracker in the range \(|\eta |<2.0\) is covered by a transition radiation tracker (TRT). It consists of straw drift tubes filled with a gas mixture of 70% Xe, 27% \(\hbox {CO}_2\) and 3% \(\hbox {O}_2\), interleaved with polypropylene/polyethylene radiators that produce transition radiation for particles with a large Lorentz factor. This radiation is absorbed by the Xe-based gas mixture, allowing electrons to be discriminated from hadrons over a wide energy range. Some of the TRT modules instead contain a gas mixture of 70% Ar, 28.5% \(\hbox {CO}_2\) and 1.5% \(\hbox {O}_2\) as a mitigation for gas leaks that cannot be repaired without an invasive opening of the inner detector. At the end of Run 2 data-taking, the two innermost TRT barrel layers, i.e. about half of the modules in \(|\eta |<0.6\), and 3 (2) out of 14 endcap wheels in \(-2<\eta <-1\) (\(1<\eta <2\)) were running with the argon-based gas mixture. The presence of this gas mixture is taken into account in the simulation, and the corresponding loss in identification power is partially mitigated by a dedicated TRT particle-identification algorithm [7]. For charged particles with transverse momenta \(>0.5\) \(\text {GeV }\) the TRT typically provides 35 hits per track.

The calorimeter system has both electromagnetic (EM) and hadronic components. It is designed to provide full coverage in \(\phi \) and covers the pseudorapidity range \(|\eta |<4.9\), with finer granularity over the region matched to the inner detector. The EM calorimeter is a lead/liquid-argon (LAr) sampling calorimeter with an accordion geometry. It is divided into two half-barrels (\(-1.475<\eta <0\) and \(0<\eta <1.475\)) and two endcap components (\(1.375<|\eta |<3.2\)). The transition region between the barrel and endcaps (\(1.37<|\eta |<1.52\)) contains significant additional inactive material [4]. Over the region devoted to precision measurements (\(|\eta |<2.5\), excluding the transition region), the EM calorimeter is segmented into three layers longitudinal in shower depth. The first layer consists of strips finely grained in the \(\eta \) direction, offering excellent discrimination between isolated photons and pairs of closely spaced photons coming from \(\pi ^{0}\rightarrow \gamma \gamma \) decay. For electrons and photons with high transverse energy, most of the energy is collected in the second layer, which has a lateral granularity of \(0.025\times 0.025\) in \((\eta , \phi )\) space. The third layer provides measurements of energy deposited in the tails of the shower. In front of the accordion calorimeter, a thin presampler layer, covering the pseudorapidity interval \(|\eta |<1.8\), is used to correct for energy loss upstream of the calorimeter.

Three hadronic calorimeter layers surround the EM calorimeter. For electrons and photons, they provide additional background discrimination through measurements of hadronic energy. The barrel hadronic calorimeter (\(|\eta | < 1.7\)) is an iron/scintillator tile sampling calorimeter with wavelength-shifting fibers. For the hadronic endcaps, copper/LAr calorimeters are used. The forward regions (FCal) are instrumented with copper–tungsten/LAr calorimeters for both the EM and hadronic energy measurements up to \(|\eta | = 4.9\). The LAr-based detectors are housed in one barrel and two endcap cryostats.

The outermost layers of ATLAS consist of an external muon spectrometer (MS) in the pseudorapidity range \(|\eta |<2.7\), incorporating three large toroidal magnet assemblies with eight coils each. The field integral of the toroids ranges between 2.0 and 6.0 Tm for most of the acceptance. The MS includes precision tracking chambers and fast detectors for triggering.

3 ATLAS trigger system

A two-level trigger system [3] is used to select events of interest. The first-level (L1) trigger, implemented in custom hardware, uses coarser-granularity signals from the calorimeters and the muon chambers to reduce the event rate from the \({40}\hbox { MHz}\) bunch crossing rate to below \({100}\hbox { kHz}\); it has \(2.5\,\upmu \hbox {s}\) to decide which events to keep in order to achieve this factor-of-400 reduction. L1 also defines regions-of-interest (RoIs) containing calorimeter clusters with high transverse energy, \(E_{\text {T}}\), or muon tracks in the muon chambers.

Events accepted by L1 are processed by the high-level trigger (HLT), based on algorithms implemented in software, which must further reduce the number of events recorded to disk to an average rate of about \({1}\hbox { kHz}\) within a few seconds. The HLT uses fine-granularity calorimeter information, precision measurements from the muon spectrometer and tracking information from the ID, none of which are available at L1. HLT reconstruction can be executed either within the RoIs identified at L1 or for the full detector (full-scan). Selection requirements are applied to the particle candidates after each HLT step, so that if a candidate fails at a given step, the subsequent steps are not executed. This early rejection is essential to reduce the time the HLT needs to reconstruct the event and make a decision.
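The early-rejection logic can be illustrated with a minimal Python sketch. The step names, the candidate fields (`et`, `ntracks`) and the thresholds are hypothetical placeholders rather than ATLAS trigger configuration; each step is modelled as a reconstruction function followed by a selection, and the loop stops at the first failed selection.

```python
from typing import Callable, Dict, List, Tuple

Candidate = Dict[str, float]
# A step = (name, reconstruction function, selection predicate); all hypothetical.
Step = Tuple[str, Callable[[Candidate], Candidate], Callable[[Candidate], bool]]


def run_chain(candidate: Candidate, steps: List[Step]) -> Tuple[bool, List[str]]:
    """Execute the steps in order, stopping at the first failed selection.

    Returns the final decision and the names of the steps actually executed,
    so the CPU saved by early rejection is visible.
    """
    executed: List[str] = []
    for name, reconstruct, select in steps:
        executed.append(name)
        candidate = reconstruct(candidate)
        if not select(candidate):
            return False, executed  # later, more expensive steps are skipped
    return True, executed


# Illustrative chain: a cheap calorimeter cut, then a (notionally costly)
# tracking step, then a tighter precision cut. Thresholds in GeV are made up.
EXAMPLE_STEPS: List[Step] = [
    ("fast_calo", lambda c: c, lambda c: c["et"] > 20.0),
    ("fast_track", lambda c: {**c, "has_track": float(c["ntracks"] > 0)},
     lambda c: c["has_track"] > 0),
    ("precision", lambda c: c, lambda c: c["et"] > 26.0),
]
```

With these placeholder values, a candidate with `et = 15.0` is rejected after `fast_calo` alone, so the tracking step is never run for it.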

A sequence of L1 and HLT trigger algorithms is called a ‘trigger’ and is meant to identify one or more particles of a given type and a given threshold of transverse energy or momentum. For example, electron and photon triggers are meant to select events with one or more electrons or photons in the detector. The configuration of the trigger is controlled by the ‘trigger menu’, which defines a full list of the L1 and HLT triggers and their configurations. Menu composition and trigger thresholds are optimised for the LHC running conditions (beam type, luminosity, etc.) to fit within the event acceptance rate and the bandwidth constraints of the data acquisition system of the ATLAS detector as well as the offline storage constraints.

In addition to the triggers described above, there are ‘rerun’ triggers which never accept an event on their own, but are configured to run only on the events accepted by other triggers, and their decision is recorded for offline use. This information is used for studies of the trigger efficiency, which is calculated separately for each object (leg) of the multi-object triggers.

Trigger thresholds and identification criteria sometimes have to be modified to maintain a stable output rate. To ensure an optimal trigger menu within the rate constraints of a given LHC luminosity, prescale factors can be applied to both the L1 and HLT triggers independently and configured during data-taking. They allow the experiment either to disable triggers completely or to set the fraction of events that they may accept.
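A prescale factor N can be pictured as accepting one out of every N events offered to a trigger. The sketch below uses a deterministic counter and treats N < 1 as "disabled"; both conventions, and the names `Prescaler` and `count_recorded`, are assumptions for illustration rather than the ATLAS implementation. Because the L1 and HLT prescales act independently, a trigger with factors N_L1 and N_HLT records a fraction 1/(N_L1 N_HLT) of the events offered to it.

```python
class Prescaler:
    """Accept one out of every `factor` offered events (hypothetical helper).

    A fractional counter lets non-integer factors work, e.g. 2.5 accepts 2 of 5.
    """

    def __init__(self, factor: float) -> None:
        self.factor = factor
        self.counter = 0.0

    def accept(self) -> bool:
        if self.factor < 1.0:  # convention assumed here: factor < 1 disables the trigger
            return False
        self.counter += 1.0
        if self.counter >= self.factor:
            self.counter -= self.factor
            return True
        return False


def count_recorded(n_events: int, l1_factor: float, hlt_factor: float) -> int:
    """Number of events recorded when L1 and HLT prescales are applied independently."""
    l1, hlt = Prescaler(l1_factor), Prescaler(hlt_factor)
    recorded = 0
    for _ in range(n_events):
        # 'and' short-circuits: the HLT prescaler only sees events accepted at L1.
        if l1.accept() and hlt.accept():
            recorded += 1
    return recorded
```

For example, `count_recorded(1000, 2, 5)` gives 100, i.e. one event in ten.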

4 Data sets and simulation samples

The results described in this paper use the full pp collision data set recorded by ATLAS between 2015 and 2018 with the LHC operating at a centre-of-mass energy of \(\sqrt{s}=13\,\) \(\text {TeV }\). The maximum instantaneous luminosities increased by a factor of four during the four years of Run 2, resulting in an increase in the average number of interactions per bunch crossing, \(\langle \mu \rangle \), also referred to as ‘pile-up’. In addition to pp data, the heavy-ion (HI) physics programme runs for one month per year, typically starting in November. During this period, the LHC provides either lead–lead (PbPb) ion collisions, or special reference runs with either low-pile-up pp or proton–lead (pPb) ion collisions. The per-year values of maximum instantaneous luminosity, pile-up and integrated luminosity after requiring stable beam conditions and a functional detector are summarised in Table 1 for the standard pp collisions and in Table 2 for the HI programme.

Samples of simulated \(Z\rightarrow ee\) and \(W\rightarrow e\nu \) decays are used to benchmark the expected electron trigger efficiencies and to optimise the electron identification criteria. The Powheg-Box v1 Monte Carlo (MC) generator [10,11,12,13] is used for the simulation of the hard-scattering in these samples. It is interfaced to Pythia 8.186 [14] for the modelling of the parton shower, hadronisation, and underlying event (UE), with parameters set according to the AZNLO tune [15]. The CT10 PDF set [16] is used for the hard-scattering processes, whereas the CTEQ6L1 PDF set [17] is used for the parton shower. The effect of QED final-state radiation is simulated with Photos++ (v3.52) [18, 19]. The EvtGen v1.2.0 program [20] is used to decay bottom and charm hadrons. For optimisation of the low-\(E_{\text {T}}\) electron selection, \(J/\psi \rightarrow ee\) samples are used. These were generated with Pythia 8.186, the A14 set of tuned parameters [21], and the CTEQ6L1 PDF set for both the hard-scattering processes and the parton shower. For high-\(E_{\text {T}}\) electron trigger studies, an MC event sample for the \(gg\rightarrow \mathrm {radion\,(3~\text {TeV })}\rightarrow VV\rightarrow ee qq\) process was produced with MadGraph5-2.6.0 [22] interfaced to Pythia 8.212.

Background samples for electron processes were simulated with two-to-two processes in Pythia 8.186 with the A14 set of tuned parameters and NNPDF23LO [23]. These processes include multijet production, \(qg\rightarrow q\gamma \), \(q{\bar{q}}\rightarrow q\gamma \), W / Z boson production (plus other electroweak processes) and top-quark production. A filter is applied to enrich the sample in electron backgrounds: selected events have particles (excluding muons and neutrinos) produced in the hard scatter with a summed transverse energy exceeding 17 \(\text {GeV }\) in a region of \(\Delta \eta \times \Delta \phi =0.1\times 0.1\). For the background studies, electrons from W / Z boson production are excluded using generator-level information.

For low-\(E_{\text {T}}\) photon trigger studies, samples of Z\(\rightarrow \ell \ell \gamma \) (\(\ell = e,\mu \)) events with transverse energy of the photon above 10 \(\text {GeV }\) were generated with Sherpa 2.1.1 [24] and the CT10 PDF set. For high-\(E_{\text {T}}\) photon trigger studies, MC samples of prompt-photon production generated with Pythia 8.186 are used. These samples include the leading-order \(\gamma \)+jet events from \(qg\rightarrow q\gamma \) and \(q{\overline{q}} \rightarrow g\gamma \) hard-scattering processes, as well as prompt photons from quark fragmentation in QCD dijet events. In addition to the samples detailed above, samples of a Standard Model Higgs boson produced via gluon–gluon fusion decaying into two photons were generated using Powheg-Box, NNLOPS implementation [25, 26], with the PDF4LHC15 PDF set [27], and interfaced to Pythia 8.186 for parton showering, hadronisation and the UE using the AZNLO set of tuned parameters.

Simulation of collision events includes the effect of multiple pp interactions in the same or neighbouring bunch crossings. The simulation of pile-up collisions was performed with Pythia 8.186 using the ATLAS A3 set of tuned parameters [28] and the NNPDF23LO PDF set, and weighted to reproduce the average number of pile-up interactions per bunch crossing observed in data. The generated events were passed through a full detector simulation [29] based on Geant 4 [30].

5 Offline object reconstruction and identification

The offline electron and photon reconstruction [31] uses dynamic, variable-size clusters of energy deposits measured in topologically connected EM and hadronic calorimeter cells [32], called topo-clusters, to recover energy from bremsstrahlung photons or from electrons from photon conversions. After applying initial position corrections and energy calibrations to the topo-clusters, they are matched to ID tracks re-fitted to account for bremsstrahlung, following the procedure described in Ref. [33], to reconstruct electron candidates. Topo-clusters not matched to any track or matched to conversion vertices are reconstructed as photon candidates. The electron and photon candidates to be used for analyses then have their energies recalibrated.

Identification of photon candidates in ATLAS relies on rectangular selection requirements based on calorimetric variables [31] which deliver good separation between prompt photons and fake signatures. Fake photon signatures can result either from non-prompt photons originating from the decay of neutral hadrons in jets, or from jets depositing a large energy fraction in the EM calorimeter. Two identification working points (WPs), ‘loose’ and ‘tight’, are defined for photons. Photon identification WPs are strictly inclusive, i.e. photons satisfying the ‘tight’ selection are a subset of those satisfying the ‘loose’ selection. The ‘loose’ selection is based on shower shapes in the second layer of the EM calorimeter and on the energy deposited in the hadronic calorimeter. In addition to the ‘loose’ selection criteria, the ‘tight’ selection uses information from the finely segmented first layer of the calorimeter. For a collection of photons radiated from leptons in Z decays and with \(E_{\text {T}}\) >25 \(\text {GeV }\), the efficiency integrated over 2015–2017 data sets of the ‘loose’ (‘tight’) selection is 98.9% (87.5%) for photons not matched to any track and 96.3% (87.6%) for photons matched to conversion vertices [31].

Prompt electrons entering the central region of the detector (\(|\eta | < 2.47\)) are selected using a likelihood-based (LH) identification [31], which exploits the characteristic features of energy deposits in the EM calorimeters (longitudinal and lateral shower shapes), track quality, track–cluster matching, and particle identification by the TRT. The LH probability density functions (pdfs) for the \(E_{\text {T}}\) range of 4.5 to 15 \(\text {GeV }\) are derived from \(J/\psi \rightarrow ee\) and for \(E_{\text {T}}\) \(\,>15\) \(\text {GeV }\) from \(Z\rightarrow ee\) events as described in Ref. [31]. Different pdfs are obtained for each identification quantity in separate bins in electron-candidate \(E_{\text {T}}\) and \(\eta \). To ensure a smooth variation of the electron identification efficiency with the electron \(E_{\text {T}}\), the discriminant requirements are varied in finer bins than those defined for the pdfs and, at the \(E_{\text {T}}\) bin boundaries, a linear interpolation between the neighbouring bins in \(E_{\text {T}}\) is used to determine both the pdf values and the discriminant requirements at the bin boundaries. This procedure is referred to as ‘smoothing’. The discriminant threshold is also adjusted linearly as a function of the number of reconstructed vertices to yield a stable rejection of background electrons. Three operating points, corresponding to increasing threshold values for the LH discriminant, are defined (identification efficiencies quoted are averages for electroweak processes integrated over 2015–2017 data sets): ‘loose’ (93\(\%\)), ‘medium’ (88\(\%\)) and ‘tight’ (\(80\%\)) [31].
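The structure of the LH selection can be sketched as follows. We assume the discriminant form d_L = L_S/(L_S + L_B), where each likelihood is a product of one-dimensional pdfs of the identification variables; the pdfs, the \(E_{\text {T}}\) nodes, the threshold values and the vertex slope used below are placeholders. The threshold is linearly interpolated between neighbouring \(E_{\text {T}}\) nodes ('smoothing') and shifted linearly with the number of reconstructed vertices, as described above.

```python
import bisect
import math
from typing import Callable, Dict, Sequence

Pdf = Callable[[float], float]


def likelihood(values: Dict[str, float], pdfs: Dict[str, Pdf]) -> float:
    """Product of one-dimensional pdfs evaluated at the candidate's variables."""
    return math.prod(pdfs[name](x) for name, x in values.items())


def lh_discriminant(values: Dict[str, float], sig: Dict[str, Pdf], bkg: Dict[str, Pdf]) -> float:
    ls, lb = likelihood(values, sig), likelihood(values, bkg)
    return ls / (ls + lb)


def interpolate(x: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Piecewise-linear interpolation of ys(xs) at x, clamped outside the nodes."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect.bisect_right(xs, x)  # xs[i - 1] <= x < xs[i]
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def passes_lh(d: float, et: float, n_vtx: int,
              et_nodes: Sequence[float], cut_nodes: Sequence[float],
              vtx_slope: float) -> bool:
    """Smoothed, pile-up-corrected threshold: interpolated in E_T, linear in n_vtx."""
    cut = interpolate(et, et_nodes, cut_nodes) + vtx_slope * n_vtx
    return d > cut
```

With toy pdfs \(2x\) (signal) and \(2(1-x)\) (background), a candidate at \(x=0.75\) has \(d_L = 1.5/(1.5+0.5) = 0.75\).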

Muon candidates, used in photon performance studies, are identified by matching ID tracks to tracks reconstructed in the muon spectrometer [34].

To reduce backgrounds from misidentified jets and from light- and heavy-flavour hadron decays inside jets, photon and lepton candidates are often required to be isolated. This isolation selection is specific to the analysis topology. The calorimeter isolation \(E_\mathrm {T}^\text {iso}\) is computed as the sum of transverse energies of topo-clusters in the calorimeters, in a cone around the candidate. The energy deposited by the photon or lepton candidate and the contributions from the UE and pile-up are subtracted on an event-by-event basis [35]. The track isolation variable, \(p_\mathrm {T}^\text {iso}\), is obtained by summing the scalar \(p_{\text {T}}\) of good-quality tracks in a cone around the candidate; good tracks are defined here as having \(p_{\text {T}}\) \(>1\) \(\text {GeV }\) and a distance of closest approach to the primary vertex along the beam axis \(|z_0\sin \theta |<3\) mm, and the tracks associated with the photon conversion or the lepton candidate are excluded. The exact definitions of a few WPs used in this paper are provided in Table 3 and described in detail in Refs. [31, 34].
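The track-isolation sum can be written as a short loop. The `Track` fields and the cone size passed in are illustrative assumptions (the actual WP cone definitions are those of Table 3); the quality requirements (\(p_{\text {T}}>1\) GeV, \(|z_0\sin \theta |<3\) mm) and the exclusion of the candidate's own tracks follow the text.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass(frozen=True)
class Track:
    track_id: int
    pt: float            # GeV
    eta: float
    phi: float
    z0_sin_theta: float  # mm, relative to the primary vertex


def delta_r(eta1: float, phi1: float, eta2: float, phi2: float) -> float:
    """Angular distance, with the phi difference wrapped into [-pi, pi]."""
    dphi = math.remainder(phi1 - phi2, 2.0 * math.pi)
    return math.hypot(eta1 - eta2, dphi)


def track_isolation(eta: float, phi: float, tracks: Iterable[Track],
                    cone: float, own_track_ids: Set[int]) -> float:
    """Scalar pT sum of good-quality tracks within `cone` of the candidate."""
    total = 0.0
    for t in tracks:
        if t.track_id in own_track_ids:
            continue  # lepton track or conversion tracks of the candidate itself
        if t.pt <= 1.0 or abs(t.z0_sin_theta) >= 3.0:
            continue  # quality requirements quoted in the text
        if delta_r(eta, phi, t.eta, t.phi) < cone:
            total += t.pt
    return total
```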

6 Trigger reconstruction and identification of photons and electrons

Photon and electron reconstruction at the HLT stage is performed on each EM RoI provided by L1 that satisfies the \(E_{\text {T}}\) and isolation requirements specified by the trigger menu. It proceeds in a series of sequential steps as shown in Fig. 1. In the HLT, fast algorithms are executed first, allowing precision algorithms (which closely reproduce the offline algorithms and require more CPU time) to run at a reduced rate later in the trigger sequence.

Fast algorithms are executed using calorimeter and ID information within the RoI to perform the initial selection and identification of the photon and electron candidates, and achieve early background rejection.

If a particle candidate satisfies the criteria defined for the fast selection, the precision algorithms are executed in the HLT, where access to detector information outside the RoI is possible. These precision online algorithms are similar to their offline counterparts, with the following exceptions: the bremsstrahlung-aware re-fit of electron tracks [33] and electron and photon dynamic, variable-size topo-clusters [31] are not used online; photon candidates are identified using only the calorimeter information online; the online algorithms use \(\langle \mu \rangle \) to assess pile-up, while the number of primary vertices is used offline. In addition to the above, some cell-energy-level corrections are not available online, such as the correction for transient changes in LAr high-voltage [36], or differ in implementation, such as the bunch crossing position-dependent pile-up correction [37, 38].

6.1 Photon and electron triggers at L1

The details of the Run 2 L1 trigger algorithms can be found in Ref. [3], and only the basic elements are described here. The L1 trigger for photons and electrons uses calorimeter information in the central (\(|\eta |<2.5\)) region to build an EM RoI. A sliding window algorithm is used, with a window consisting of \(4\times 4\) trigger towers with granularity \(0.1\times 0.1\) in \(\eta \) and \(\phi \), longitudinally segmented into electromagnetic and hadronic towers. Within the window, the algorithm takes the maximum \(E_{\text {T}}\) of the four possible pairs of nearest-neighbour electromagnetic towers in a \(2 \times 2\) central region as the EM transverse energy of the cluster. The energy of the trigger towers is calibrated at the electromagnetic energy scale (EM scale). This EM scale is not the same as the one used in the offline reconstruction, which can lead to trigger inefficiencies relative to offline reconstruction as discussed in Sect. 8.
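The quantities used at L1 for a single window position can be sketched as below. The grids `em` and `had` (tower \(E_{\text {T}}\) in GeV at the EM scale, indexed as `[ieta][iphi]` with \(0.1\times 0.1\) towers) and the function name are assumptions. The code returns the cluster \(E_{\text {T}}\) (the largest of the four nearest-neighbour pair sums in the \(2\times 2\) central region), the \(E_{\text {T}}\) in the 12 surrounding EM towers, and the hadronic \(E_{\text {T}}\) behind the \(2\times 2\) region; any logic for avoiding duplicate RoIs from overlapping windows is omitted.

```python
from typing import List, Tuple

Grid = List[List[float]]  # tower E_T in GeV, indexed [ieta][iphi]


def window_sums(em: Grid, had: Grid, ieta: int, iphi: int) -> Tuple[float, float, float]:
    """Evaluate the 4x4 window whose 2x2 central region starts at (ieta, iphi).

    Returns (cluster E_T, EM isolation E_T, hadronic core E_T).
    phi is periodic and wraps around; eta needs a one-tower margin.
    """
    if ieta < 1 or ieta + 2 >= len(em):
        raise ValueError("window extends outside the eta range of the grid")
    nphi = len(em[0])

    def tower(grid: Grid, deta: int, dphi: int) -> float:
        return grid[ieta + deta][(iphi + dphi) % nphi]

    a, b = tower(em, 0, 0), tower(em, 0, 1)
    c, d = tower(em, 1, 0), tower(em, 1, 1)
    # Largest of the four nearest-neighbour pairs (two along phi, two along eta).
    cluster_et = max(a + b, c + d, a + c, b + d)
    core = a + b + c + d
    window = sum(tower(em, de, dp) for de in range(-1, 3) for dp in range(-1, 3))
    em_isolation = window - core  # the 12 towers surrounding the 2x2 region
    had_core = sum(tower(had, de, dp) for de in (0, 1) for dp in (0, 1))
    return cluster_et, em_isolation, had_core
```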

A nominal transverse energy threshold is applied (e.g. \(E_{\text {T}}\) > 22 \(\text {GeV }\), denoted by the trigger name EM22). The threshold can also vary with \(\eta \), in steps of 0.1, to account for the \(\eta \) dependence of the energy scale. Typical variations of the threshold are \(-2\) to \(+3\,\) \(\text {GeV }\) relative to the nominal value. Optionally, a selection to reject hadronic activity can be applied: candidate electrons and photons are rejected if the sum of transverse energies in hadronic towers matched to the \(2\times 2\) central region is at least 1 \(\text {GeV }\) and exceeds \(E_{\text {T}}/23.0-0.2\) \(\text {GeV }\). Finally, an EM isolation requirement can be applied: candidate photons and electrons are rejected if the sum of transverse energies in the 12 towers surrounding the \(2\times 2\) central region in the EM layer is at least 2 \(\text {GeV }\) and exceeds \(E_{\text {T}}/8.0-1.8\) \(\text {GeV }\). No requirements based on hadronic activity or EM isolation are applied above 50 \(\text {GeV }\) of \(E_{\text {T}}\) reconstructed at L1. These additional selections were optimised to maintain a fixed L1 efficiency at the lowest possible rate. The effect of these additional selections on the rate and efficiency is discussed in Sect. 8.
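The L1 selection described above reduces to a few comparisons. The sketch below assumes the cluster \(E_{\text {T}}\), hadronic core \(E_{\text {T}}\) and EM isolation \(E_{\text {T}}\) have already been computed (all in GeV). The function names, the flags and the optional table of per-0.1-in-\(|\eta |\) threshold offsets are hypothetical; the numerical constants are those quoted in the text.

```python
from typing import Optional, Sequence


def l1_threshold(nominal: float, eta: float,
                 offsets: Optional[Sequence[float]] = None) -> float:
    """Nominal threshold plus an optional offset per 0.1-wide |eta| bin (hypothetical table)."""
    if not offsets:
        return nominal
    ibin = min(int(abs(eta) / 0.1), len(offsets) - 1)
    return nominal + offsets[ibin]


def l1_em_accept(et: float, had_core: float, em_iso: float, threshold: float,
                 had_veto: bool = True, em_isolation: bool = True) -> bool:
    if et <= threshold:
        return False
    if et > 50.0:
        return True  # no hadronic or isolation requirements above 50 GeV
    # Reject if the hadronic E_T is at least 1 GeV and exceeds E_T/23.0 - 0.2 GeV.
    if had_veto and had_core >= 1.0 and had_core > et / 23.0 - 0.2:
        return False
    # Reject if the EM isolation E_T is at least 2 GeV and exceeds E_T/8.0 - 1.8 GeV.
    if em_isolation and em_iso >= 2.0 and em_iso > et / 8.0 - 1.8:
        return False
    return True
```

For a 30 GeV cluster, for instance, the EM isolation limit is \(30/8.0-1.8 = 1.95\) GeV, but it only bites once the isolation sum also reaches 2 GeV.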

6.2 HLT photon reconstruction and identification for pp data-taking

The HLT fast algorithm reconstructs clusters from the calorimeter cells within the EM RoIs identified by L1. To minimise the HLT latency, the fast algorithm uses only the second layer of the EM calorimeter to find the cell with the largest deposited transverse energy in the RoI. This cell is referred to as the ‘pre-seed’. Nine possible \(3\times 7\) windows (\(\Delta \eta \times \Delta \phi =0.075\times 0.175\)) around the pre-seed cell are checked to ensure that the local maximum, the cluster seed, is found. The final cluster position is obtained by calculating the energy-weighted average cell positions inside a \(3\times 7\) window centred on the cluster seed. To compute the accumulated energy in all EM calorimeter layers, a cluster size of \(3\times 7\) is used in the barrel and a cluster size of \(5\times 5\) in the endcaps. Several corrections, based on the offline reconstruction algorithms, are used at the fast algorithm step in order to improve the resolution of the cluster position and energy.
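The seed search and position calculation can be sketched on a grid of second-layer cell energies for one RoI. We read the "nine possible windows" as the \(3\times 7\) windows centred on the pre-seed and on its eight neighbours, taking the one with the largest summed energy as the seed; this reading, the grid layout (`cells[ieta][iphi]`, \(0.025\times 0.025\) cells starting at hypothetical lower edges `eta0`, `phi0`) and the function names are assumptions. Cells outside the grid are ignored, and the \(5\times 5\) endcap window and energy corrections are not modelled.

```python
from typing import List, Tuple

Cells = List[List[float]]  # layer-2 cell energies, indexed [ieta][iphi]


def window_energy(cells: Cells, ce: int, cp: int, half_eta: int = 1, half_phi: int = 3) -> float:
    """Energy in a (2*half_eta+1) x (2*half_phi+1) window; the default is 3x7."""
    return sum(cells[ie][ip]
               for ie in range(max(ce - half_eta, 0), min(ce + half_eta + 1, len(cells)))
               for ip in range(max(cp - half_phi, 0), min(cp + half_phi + 1, len(cells[0]))))


def fast_cluster(cells: Cells, eta0: float, phi0: float,
                 size: float = 0.025) -> Tuple[Tuple[int, int], float, float]:
    neta, nphi = len(cells), len(cells[0])
    pre_seed = max(((ie, ip) for ie in range(neta) for ip in range(nphi)),
                   key=lambda c: cells[c[0]][c[1]])
    # Candidate window centres: the pre-seed first (so it wins ties), then its neighbours.
    offsets = [(0, 0)] + [(de, dp) for de in (-1, 0, 1) for dp in (-1, 0, 1) if (de, dp) != (0, 0)]
    centres = [(pre_seed[0] + de, pre_seed[1] + dp) for de, dp in offsets
               if 0 <= pre_seed[0] + de < neta and 0 <= pre_seed[1] + dp < nphi]
    seed = max(centres, key=lambda c: window_energy(cells, *c))
    # Energy-weighted average of cell-centre positions in the 3x7 window around the seed.
    e_sum = eta_sum = phi_sum = 0.0
    for ie in range(max(seed[0] - 1, 0), min(seed[0] + 2, neta)):
        for ip in range(max(seed[1] - 3, 0), min(seed[1] + 4, nphi)):
            e = cells[ie][ip]
            e_sum += e
            eta_sum += e * (eta0 + (ie + 0.5) * size)
            phi_sum += e * (phi0 + (ip + 0.5) * size)
    if e_sum <= 0.0:
        raise ValueError("no energy in the seed window")
    return seed, eta_sum / e_sum, phi_sum / e_sum
```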

In this fast reconstruction step, only selections on the cluster \(E_{\text {T}}\) and on the shower shape parameters \(R_\mathrm {had}\), \(R_\eta \) and \(E_\mathrm {ratio}\), which have good discrimination power between background and signal, are applied.
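These variables are not defined in this section. The sketch below assumes the definitions used in ATLAS photon identification: \(R_\mathrm {had}\) is the ratio of the hadronic \(E_{\text {T}}\) behind the cluster to the cluster \(E_{\text {T}}\); \(R_\eta \) is the ratio of the second-layer energy in \(3\times 7\) (\(\eta \times \phi \)) cells to that in \(7\times 7\) cells, both centred on the cluster; and \(E_\mathrm {ratio}\) compares the two largest energy deposits in the first-layer strips (taken here simply as the two largest strip energies, whereas the actual algorithm may search for local maxima). Function names and inputs are illustrative.

```python
from typing import List, Sequence

Cells = List[List[float]]  # second-layer cell energies, indexed [ieta][iphi]


def r_had(et_had: float, et_cluster: float) -> float:
    return et_had / et_cluster


def _window(cells: Cells, ce: int, cp: int, n_eta: int, n_phi: int) -> float:
    return sum(cells[ie][ip]
               for ie in range(ce - n_eta // 2, ce + n_eta // 2 + 1)
               for ip in range(cp - n_phi // 2, cp + n_phi // 2 + 1))


def r_eta(cells: Cells, ce: int, cp: int) -> float:
    """E(3x7) / E(7x7) around cell (ce, cp); assumes the 7x7 window fits in the grid."""
    return _window(cells, ce, cp, 3, 7) / _window(cells, ce, cp, 7, 7)


def e_ratio(strip_energies: Sequence[float]) -> float:
    """(E1 - E2) / (E1 + E2) for the two largest strip deposits."""
    top = sorted(strip_energies, reverse=True) + [0.0, 0.0]  # pad to at least two entries
    e1, e2 = top[0], top[1]
    return (e1 - e2) / (e1 + e2) if e1 + e2 > 0 else 0.0
```

A single isolated photon gives \(E_\mathrm {ratio}\) close to 1, while the two separated strip maxima of a \(\pi ^0\rightarrow \gamma \gamma \) decay pull it towards 0.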

In the precision step, offline-like algorithms are used for the reconstruction of calorimeter quantities. After retrieving the cell information from regions slightly larger than the RoI, the precision HLT reconstruction uses the offline sliding-window algorithm to construct clusters [39]. The energy of the clusters is calibrated using a multivariate technique such that the response of the calorimeter layers is corrected in data and simulation [38]. The online photon identification relies on the same cluster shower shapes that are used in the offline algorithms (details are given in Ref. [40]), and three identification WPs are defined: ‘loose’, ‘medium’, and ‘tight’. The ‘medium’ identification is used only in the HLT.

An optional requirement on calorimeter-only isolation in photon triggers uses topo-clusters, similar to the offline isolation calculation [31]. Full-scan topo-cluster reconstruction is needed to compute the energy density of the event on-the-fly in the HLT; this is then used to subtract ambient noise in the isolation cone. An isolation cone of size \(\Delta R=0.2\,(0.4)\) around the photon candidate is used for the very-loose (tight) isolation requirement, denoted by ‘icalovloose’ (‘icalotight’). If the ratio of the transverse energy in the topo-clusters to the transverse energy of the photon candidate is less than 10% (3%, with an energy offset of 2.45 \(\text {GeV }\)), then the photon is considered isolated for ‘icalovloose’ (‘icalotight’) by the HLT. These isolation criteria are over 98% efficient for offline photons satisfying tight isolation. The full-scan topo-cluster reconstruction is executed only once per event, at the end of the trigger sequence (as it is very CPU-intensive), and is shared by all isolated triggers across all trigger signatures.
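A minimal sketch of the two HLT calorimeter-isolation requirements follows. It assumes the topo-clusters are given as \((E_{\text {T}}, \eta , \phi )\) tuples with the candidate's own energy already removed, and subtracts the ambient contribution as \(\rho \cdot \pi \Delta R^2\), with \(\rho \) the event energy density from the full-scan reconstruction. For ‘icalotight’ we read the 3% requirement with a 2.45 GeV offset as \(E_\mathrm {T}^\text {iso} < 0.03\,E_{\text {T}} + 2.45\) GeV. These conventions and the function names are assumptions.

```python
import math
from typing import Iterable, Tuple

Cluster = Tuple[float, float, float]  # (E_T in GeV, eta, phi)


def calo_isolation(clusters: Iterable[Cluster], eta: float, phi: float,
                   cone: float, rho: float) -> float:
    """Topo-cluster E_T in the cone minus the ambient estimate rho * cone area."""
    raw = 0.0
    for et, c_eta, c_phi in clusters:
        dphi = math.remainder(c_phi - phi, 2.0 * math.pi)
        if math.hypot(c_eta - eta, dphi) < cone:
            raw += et
    return raw - rho * math.pi * cone ** 2


def icalovloose(et: float, eta: float, phi: float,
                clusters: Iterable[Cluster], rho: float) -> bool:
    return calo_isolation(clusters, eta, phi, 0.2, rho) / et < 0.10


def icalotight(et: float, eta: float, phi: float,
               clusters: Iterable[Cluster], rho: float) -> bool:
    return calo_isolation(clusters, eta, phi, 0.4, rho) < 0.03 * et + 2.45
```

For a 50 GeV photon with 3 GeV of cluster \(E_{\text {T}}\) within \(\Delta R=0.2\) and another 2 GeV between 0.2 and 0.4 (and \(\rho =0\)), the sketch passes ‘icalovloose’ (\(3/50 = 6\%\)) but fails ‘icalotight’ (\(5 > 1.5 + 2.45\) GeV).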

6.3 HLT electron reconstruction and identification for pp data-taking

HLT electron reconstruction also has fast and precision steps. The description below corresponds to the implementation at the end of Run 2 data-taking; modifications made to the initial Run 2 implementation are described in Sect. 10.1.

The fast calorimeter reconstruction and selection steps for electrons have two implementations: a cut-based algorithm and a neural-network-based ‘Ringer’ algorithm. The former algorithm, the same as described above for photons, is used for electron triggers with \(E_{\text {T}}\) \(<15\) \(\text {GeV }\). The Ringer algorithm, described in detail in Sect. 6.3.1, is used for triggering electrons with \(E_{\text {T}}\) \(\ge 15\) \(\text {GeV }\). For both fast algorithms, electron candidates are required to have tracks from the fast track reconstruction step, performed inside the RoI only, matching the corresponding clusters as detailed in Sect. 10.1.

In the precision calorimeter reconstruction step, the cluster reconstruction and calibration are similar to those for photons, and to those used offline in early Run 2 analyses [41]. Precision tracks within the RoI are extrapolated to the second layer of the EM calorimeter and are required to match the clusters within \(|\Delta \eta \text {(track, cluster)}|<0.05\) and \(|\Delta \phi \text {(track, cluster)}|<0.05\) rad. The offline reconstruction uses a looser, asymmetric condition for the matching in \(\phi \) [31] to mitigate the effects of the energy loss due to bremsstrahlung; this leads to some inefficiency at the trigger level. In the precision step, the electron selection relies on a multivariate technique using a LH discriminant with four operating points: ‘lhvloose’, ‘lhloose’, ‘lhmedium’, and ‘lhtight’. The identification in the trigger is designed to be as close as possible to the offline version, but there are a few necessary differences: the discriminating variables used online have different resolutions; the momentum loss due to bremsstrahlung, \(\Delta p/p\), is not accounted for in the online LH. Triggers with ‘nod0’ suffixed to their names do not include the transverse impact parameter relative to the beam-line, \(d_0\), and its significance, \(|d_0/\sigma (d_0)|\), in the online LH: this reduces inefficiency due to the absence of the bremsstrahlung-aware re-fit in the HLT and preserves efficiency for electrons from exotic processes which do not originate at the primary vertex.
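The online track–cluster match is a simple window cut. In the sketch below the track position is assumed to be already extrapolated to the second EM layer, the function names are hypothetical, and the \(\phi \) difference is wrapped so that tracks and clusters on either side of \(\phi = \pm \pi \) are compared correctly. The looser, asymmetric offline \(\phi \) window is not reproduced, since its values are not given here.

```python
import math
from typing import List, Tuple

Position = Tuple[float, float]  # (eta, phi) at the second EM layer


def matches(track: Position, cluster: Position,
            max_deta: float = 0.05, max_dphi: float = 0.05) -> bool:
    deta = track[0] - cluster[0]
    dphi = math.remainder(track[1] - cluster[1], 2.0 * math.pi)
    return abs(deta) < max_deta and abs(dphi) < max_dphi


def matched_tracks(tracks: List[Position], cluster: Position) -> List[Position]:
    """All precision tracks in the RoI that satisfy the online matching window."""
    return [t for t in tracks if matches(t, cluster)]
```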

An additional, optional requirement of isolation denoted ‘ivarloose’ is also available for electron triggers. This tracking-only isolation is required to satisfy \(p_\mathrm {T}^\text {iso}\) \((\Delta R^\mathrm {var}<0.2)/p_{\text {T}} < 0.10\) and is calculated similarly to the offline isolation working points detailed in Table 3 in Sect. 5.
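The ‘ivarloose’ requirement can be sketched as below. The variable cone is assumed to shrink with the electron \(p_{\text {T}}\) as \(\Delta R^\mathrm {var} = \min (10~\text {GeV}/p_{\text {T}}, 0.2)\), the usual ATLAS convention for variable-cone isolation; the input tracks are assumed to have already passed the track-quality requirements and to exclude the electron's own track. Names are illustrative.

```python
import math
from typing import Iterable, Tuple

TrackTuple = Tuple[float, float, float]  # (pT in GeV, eta, phi)


def var_cone(pt: float, k_t: float = 10.0, max_cone: float = 0.2) -> float:
    """Variable cone size, assumed to be min(k_t / pT, max_cone)."""
    return min(k_t / pt, max_cone)


def ivarloose(pt: float, eta: float, phi: float, other_tracks: Iterable[TrackTuple]) -> bool:
    cone = var_cone(pt)
    iso = 0.0
    for t_pt, t_eta, t_phi in other_tracks:
        dphi = math.remainder(t_phi - phi, 2.0 * math.pi)
        if math.hypot(t_eta - eta, dphi) < cone:
            iso += t_pt
    return iso / pt < 0.10
```

Under this assumption the cone stays at 0.2 up to \(p_{\text {T}} = 50\) GeV and shrinks to 0.1 at 100 GeV, so a nearby track at \(\Delta R = 0.15\) spoils the isolation of a 40 GeV electron but not of a 100 GeV one.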

Some triggers with non-standard electron sequences are also used. For example, triggers with only \(E_{\text {T}}\) requirements applied in the HLT (fast and precision levels) and no tracking requirements are called ‘etcut’ and are used both as high-\(E_{\text {T}}\) unprescaled triggers described in Sect. 10.3 and as prescaled triggers for electron performance studies described in Ref. [31].

6.3.1 Ringer algorithm

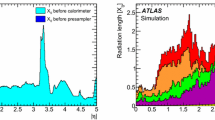

The Ringer algorithm exploits the property of EM showers to develop in the lateral direction in an approximately conical structure around the initial particle. This feature allows the relevant information from the calorimeters to be encoded into quantities describing energy sums (\(\Sigma \)) of all the cells in a concentric ring [42], referred to as ‘rings’, in each calorimeter sampling layer. The rings (\(r_2, \ldots , r_n\)) are rectangular in shape because of the calorimeter cell structure [4] as illustrated in Fig. 2. In the EM calorimeter, these rings are centred around the most energetic cell at each layer, while in the hadronic calorimeter the position of the most energetic cell in the second layer of the EM calorimeter is used as an axis. A hundred rings are defined in total within an RoI. There are \(n=8\) rings for each of the presampler, second, and third layers of the EM calorimeter, 64 rings in the first layer of the EM calorimeter, and 4 rings in each of the three layers of the hadronic calorimeter. The transverse energy deposited in each ring is normalised to the total transverse energy in the RoI.
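The ring construction can be sketched for layers stored as uniform grids of cell \(E_{\text {T}}\). Rectangular rings are modelled here as the sets of cells at the same Chebyshev distance (in cell units) from the centre cell, a simplification of the real cell geometry; the function names, the grid layout and the way the hadronic-layer centre is passed in are assumptions. Cells beyond the last ring are not used but still count towards the normalisation, which uses the total \(E_{\text {T}}\) in the RoI.

```python
from typing import List, Optional, Sequence, Tuple

Cells = List[List[float]]  # cell E_T in one layer, indexed [ieta][iphi]
Layer = Tuple[Cells, int, Optional[Tuple[int, int]]]  # (cells, n_rings, centre or None)


def hottest_cell(cells: Cells) -> Tuple[int, int]:
    return max(((ie, ip) for ie in range(len(cells)) for ip in range(len(cells[0]))),
               key=lambda c: cells[c[0]][c[1]])


def layer_rings(cells: Cells, n_rings: int,
                centre: Optional[Tuple[int, int]] = None) -> List[float]:
    """Sum cell E_T in rectangular rings; ring k holds cells at Chebyshev distance k."""
    ce, cp = centre if centre is not None else hottest_cell(cells)
    rings = [0.0] * n_rings
    for ie, row in enumerate(cells):
        for ip, et in enumerate(row):
            k = max(abs(ie - ce), abs(ip - cp))
            if k < n_rings:
                rings[k] += et
    return rings


def ringer_inputs(layers: Sequence[Layer]) -> List[float]:
    """Concatenate the rings of all layers and normalise to the total E_T in the RoI.

    EM layers pass centre=None (own hottest cell); hadronic layers pass the cell
    under the hottest second-layer EM cell, mapped to their own grid by the caller.
    """
    rings: List[float] = []
    for cells, n_rings, centre in layers:
        rings.extend(layer_rings(cells, n_rings, centre))
    total = sum(et for cells, _, _ in layers for row in cells for et in row)
    return [r / total for r in rings] if total > 0 else [0.0] * len(rings)
```

With the Run 2 configuration (8 + 64 + 8 + 8 rings in the EM layers and 4 in each of the three hadronic layers) the output has the 100 entries fed to the networks.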

The concatenated vector of 100 normalised ring transverse energies feeds an ensemble of multilayer perceptron (MLP) neural networks (NN) [43] for each \(E_{\text {T}} \times \eta \) region. The activation function of the hidden layer is a hyperbolic tangent. For the 2017 data-taking, the model parameters were optimised on the simulated \(Z\rightarrow ee\) and background data sets described in Sect. 4. In 2018, this optimisation was performed on the 2017 data. The training procedure and parameters are the same for all NNs, except for the number of hidden units in a single-hidden-layer MLP, optimised in the range of 5 to 20 units using tenfold cross-validation efficiency measurements.
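The forward pass of one single-hidden-layer MLP with a hyperbolic-tangent hidden activation is sketched below, together with the choice of network by \((E_{\text {T}}, |\eta |)\) bin. We read the ensemble as one network per bin; the output activation (also tanh here), the bin edges and all names are assumptions, and the training with tenfold cross-validation is not shown.

```python
import bisect
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class MLP:
    w1: List[List[float]]  # hidden x input weights
    b1: List[float]        # hidden biases
    w2: List[float]        # hidden -> output weights
    b2: float              # output bias

    def __call__(self, x: Sequence[float]) -> float:
        hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        # Output activation assumed to be tanh, giving a score in (-1, 1).
        return math.tanh(sum(w * h for w, h in zip(self.w2, hidden)) + self.b2)


def bin_index(value: float, lower_edges: Sequence[float]) -> int:
    """Index of the bin whose lower edge is the largest edge <= value (clamped)."""
    i = bisect.bisect_right(lower_edges, value) - 1
    return min(max(i, 0), len(lower_edges) - 1)


def select_network(nets: Dict[Tuple[int, int], MLP], et_edges: Sequence[float],
                   eta_edges: Sequence[float], et: float, eta: float) -> MLP:
    return nets[(bin_index(et, et_edges), bin_index(abs(eta), eta_edges))]
```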

The concept of ring-shaped energy reconstruction in a calorimeter slice corresponding to the RoI size for \(|\eta |<1.35\). The most energetic cell (\(r_1\)) is shown in red, while the rings around it alternate between black and white. In this scenario there are some areas not used by the Ringer algorithm calculation: the vertical strip at the right edge of the presampler and layer 1, and the horizontal strip(s) at the lower edge of layers 2 and 3

The NNs are trained and selected for operation with a heuristic technique that avoids local optima and maximises the rejection of fake electrons, while keeping the trigger electron efficiency unchanged relative to the cut-based fast-electron selection. The ensemble comprises 20 (25) NNs for the 2017 (2018) data-taking, typically with no more than 10 neurons in the hidden layer.

Each discriminant requirement is computed as a linear function of \(\langle \mu \rangle \) to ensure pile-up independence of the signal efficiency. To account for mismodelling in the MC simulations, the parameters of the linear threshold correction were derived using 2016 (2017) collision data for the 2017 (2018) data-taking period.

The Ringer algorithm increases the time taken by the fast calorimeter reconstruction step to 1–2 ms per event, approximately 45\(\%\) slower than the cut-based algorithm. However, it reduces the number of input candidates for the more CPU-demanding fast tracking step (which takes about 64 ms per event) by a factor of 1.5–6, depending on the detailed trigger configuration. Overall, the use of the Ringer algorithm enabled at least a \(50\%\) reduction in the CPU demand for the lowest-threshold unprescaled single-electron trigger. In Run 2, the Ringer algorithm was used only in the electron triggers with \(E_{\text {T}}\) thresholds above 15 \(\text {GeV }\), because a large \(Z\rightarrow ee\) event sample was available in data for its validation. Its implementation for the electron triggers with \(E_{\text {T}}\) thresholds below 15 \(\text {GeV }\) was finalised only during 2018, too late to be used for data-taking. The Ringer algorithm was not used in the photon triggers during Run 2, as they do not have any CPU-demanding track reconstruction steps and the gains from its implementation are smaller than for electrons.
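
The trade-off can be illustrated with a deliberately crude two-step cost model. Assumptions: the fast-tracking time scales linearly with the number of candidates passed to it, later steps are ignored, and the cut-based fast calorimeter step is taken to cost 1.0 ms per event, so that a 45% slowdown gives about 1.45 ms, within the quoted 1–2 ms. Only the 64 ms tracking time, the 45% slowdown and the 1.5–6 reduction range come from the text; the function names are hypothetical.

```python
def cpu_per_event_ms(
    t_calo_ms: float,
    t_track_ms: float,
    calo_slowdown: float = 1.0,
    candidate_reduction: float = 1.0,
) -> float:
    """Fast-calorimeter time plus fast-tracking time scaled by the candidate reduction."""
    return t_calo_ms * calo_slowdown + t_track_ms / candidate_reduction


def relative_saving(
    t_calo_ms: float, t_track_ms: float, calo_slowdown: float, candidate_reduction: float
) -> float:
    """Fractional CPU saving of the Ringer-like configuration over the cut-based one."""
    before = cpu_per_event_ms(t_calo_ms, t_track_ms)
    after = cpu_per_event_ms(t_calo_ms, t_track_ms, calo_slowdown, candidate_reduction)
    return 1.0 - after / before


for reduction in (1.5, 2.0, 6.0):
    saving = relative_saving(1.0, 64.0, 1.45, reduction)
    print(f"candidate reduction x{reduction}: CPU saving {saving:.0%}")
```

Because tracking dominates the budget in this model, even a modest candidate reduction outweighs the slower calorimeter step.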

6.4 HLT photon and electron reconstruction and identification for heavy-ion data-taking

Triggering on both reference pp and pPb collisions relies on strategies developed for high-pile-up pp data-taking, while for PbPb a dedicated set of triggers is required.

One of the main characteristics of HI collisions is event centrality, which is determined by the total transverse energy measured in the forward calorimeter, FCal \(\Sigma E_{\text {T}} \). Small (large) values correspond to events with a small (large) overlap region of two colliding nuclei. In a PbPb collision, the average background originating from the UE in the calorimeter and ID can affect the performance of the online reconstruction and identification of photons and electrons. Unlike pile-up in pp collisions, the UE background cannot be assumed to change slowly with time; on the contrary, it can be dramatically different event-by-event due to the varying HI collision centrality. The tracking performance is approximately centrality-independent, so the track-related requirements are identical to those for pp collisions.

To maintain the centrality independence of the photon and electron trigger performance, a UE correction is performed in a two-stage approach. First, at the fast calorimeter stage of the HLT, no shower shapes are used for the online selection and only an \(E_{\text {T}}\) requirement is imposed. This reduces the rate at which the UE correction has to be applied, while consuming only the resources required for reconstruction in RoIs. Next, the UE correction is evaluated per cell as an average in \(\phi \) for each slice of width \(\Delta \eta = 0.1\) in each calorimeter layer. This average energy is then subtracted from the cluster constituent cells. As a result, the cells in the RoI (which are used as inputs to clustering and downstream identification algorithms) contain no systematic bias due to the UE, although residual fluctuations due to the stochastic nature of the UE remain.
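
The per-slice average and its subtraction can be sketched as below. This is a simplified illustration under stated assumptions: cells are plain records with a layer label, \(\eta \), \(\phi \) and energy; the average is taken over all cells supplied for a given (layer, \(\Delta \eta = 0.1\) slice), which are assumed to cover the full \(\phi \) range; and the correction is subtracted only from the RoI cells passed separately. The record type and function names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple

SliceKey = Tuple[int, int]  # (layer, eta-slice index)


class Cell(NamedTuple):
    layer: int
    eta: float
    phi: float
    energy: float


def slice_key(cell: Cell, eta_width: float = 0.1) -> SliceKey:
    """Group cells by calorimeter layer and eta slice of width eta_width."""
    return cell.layer, math.floor(cell.eta / eta_width)


def ue_averages(all_cells: Iterable[Cell], eta_width: float = 0.1) -> Dict[SliceKey, float]:
    """Average cell energy over phi for every (layer, eta slice)."""
    sums: Dict[SliceKey, float] = defaultdict(float)
    counts: Dict[SliceKey, int] = defaultdict(int)
    for c in all_cells:
        key = slice_key(c, eta_width)
        sums[key] += c.energy
        counts[key] += 1
    return {k: sums[k] / counts[k] for k in sums}


def subtract_ue(
    roi_cells: Iterable[Cell], averages: Dict[SliceKey, float], eta_width: float = 0.1
) -> List[Cell]:
    """Return RoI cells with the slice-average UE energy removed.

    Cells in a slice with no average receive no correction (a choice made
    for this sketch).
    """
    return [
        c._replace(energy=c.energy - averages.get(slice_key(c, eta_width), 0.0))
        for c in roi_cells
    ]
```

Averaging over the full \(\phi \) ring is what makes this a first-order correction: any azimuthal modulation of the UE is averaged out rather than modelled.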

Due to the small size of the EM cluster, this first-order UE correction is found to be sufficient; the azimuthal modulation originating from the flow phenomenon [44] in the UE can be neglected.

Both the cut-based (photon and electron) and LH (electron) identifications are used for the HI triggers. Their performance is presented in Sect. 11. The cut-based electron identification for HI collisions is similar to that used for the pp data-taking in Run 1 (2010–2012) [1]. Two WPs are defined: ‘loose_ion’ and ‘medium_ion’, based on a subset of variables used in the standard electron LH selection. These working points are strictly inclusive. The ‘medium_ion’ working point uses more variables than ‘loose_ion’ to increase background rejection.

6.5 Trigger naming convention

The ATLAS Run 2 trigger naming convention is [Trigger level]_[object multiplicity][object type][minimum \(E_{\text{T}}\) value in \(\text{GeV}\)], optionally followed by an additional string detailing other requirements listed in Table 4. The trigger level is L1 or HLT and is often omitted for brevity. Possible object types are ‘EM’ for an L1 EM cluster, ‘g’ for an HLT photon and ‘e’ for an HLT electron. Additional options at L1, detailed in Sect. 6.1, are the \(\eta \)-dependence of the \(E_{\text {T}}\) threshold (denoted by the letter ‘V’ appended to the trigger name), the hadronic activity veto (denoted by ‘H’) and the EM isolation requirement (denoted by ‘I’). Although each HLT trigger is configured with an L1 trigger as its ‘seed’, the latter is not always mentioned explicitly in the trigger name. For example, a trigger named 2g20_tight_icalovloose_L12EM15VHI is designed to identify at least two photons at the HLT, each with \(E_{\text {T}}\) \(>20\,\) \(\text {GeV }\) and each satisfying the ‘tight’ photon identification criteria and calorimeter-only very loose isolation requirements; here the name explicitly mentions the L1 ‘seed’ trigger, which requires two isolated L1 EM clusters with an \(\eta \)-dependent threshold centred on an \(E_{\text {T}}\) of 15 \(\text {GeV }\).
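
The convention is regular enough to be parsed mechanically. The sketch below is a hypothetical helper, not an ATLAS tool: it assumes that tokens are separated by underscores, that an object token has the form [multiplicity][EM|g|e][threshold][L1 option letters], that every other token is an option of the preceding object, and that a trailing ‘L1…’ token names the seed.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# [multiplicity][object type][threshold in GeV][L1 option letters such as V, H, I]
_OBJ = re.compile(r"^(\d*)(EM|g|e)(\d+)([A-Z]*)$")


@dataclass
class TriggerObject:
    multiplicity: int
    obj_type: str       # 'EM' (L1 cluster), 'g' (HLT photon) or 'e' (HLT electron)
    threshold_gev: int
    l1_flags: str = ""  # e.g. 'VHI' for an L1 EM object


@dataclass
class Leg:
    obj: TriggerObject
    options: List[str] = field(default_factory=list)  # e.g. ['tight', 'icalovloose']


@dataclass
class TriggerName:
    level: Optional[str]  # 'L1', 'HLT' or None when omitted
    legs: List[Leg]
    seed: Optional[TriggerObject] = None


def parse_object(token: str) -> TriggerObject:
    m = _OBJ.match(token)
    if m is None:
        raise ValueError(f"cannot parse object token {token!r}")
    mult, obj_type, thr, flags = m.groups()
    return TriggerObject(int(mult) if mult else 1, obj_type, int(thr), flags)


def parse_trigger(name: str) -> TriggerName:
    level = None
    for prefix in ("HLT_", "L1_"):
        if name.startswith(prefix):
            level, name = prefix[:-1], name[len(prefix):]
            break
    legs: List[Leg] = []
    seed = None
    for part in name.split("_"):
        if legs and part.startswith("L1") and len(part) > 2:
            try:
                seed = parse_object(part[2:])  # 'L12EM15VHI' -> '2EM15VHI'
                continue
            except ValueError:
                pass  # non-EM seed: keep the raw token as an option
        if _OBJ.match(part):
            legs.append(Leg(parse_object(part)))
        elif legs:
            legs[-1].options.append(part)
        else:
            raise ValueError(f"name must start with an object token, got {part!r}")
    return TriggerName(level, legs, seed)
```

For example, `parse_trigger("2g20_tight_icalovloose_L12EM15VHI")` yields one leg of two 20 GeV photons with the options ‘tight’ and ‘icalovloose’, and a seed of two 15 GeV L1 EM clusters with the flags ‘VHI’.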

7 Performance measurement techniques

7.1 Rate measurements

The ATLAS data-taking conditions are archived with a time interval of the order of a minute, which defines a luminosity block. In order to obtain the rate of a given trigger as a function of the instantaneous luminosity [9, 45], individual rate measurements on different luminosity blocks from all data collected in a given year are used. If, for a given rate measurement, the ratio of trigger rate to instantaneous luminosity deviates by more than 20% from the average of the other measurements, that measurement is not used as an estimator of the rate for that trigger. This avoids averaging in rate measurements that fluctuate because of unpredictable and temporary changes in the LHC collision conditions.
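
A minimal sketch of this selection, assuming the 20% criterion is applied to the relative deviation of each block's rate-to-luminosity ratio from the mean ratio of all other blocks, and that the retained blocks are then summarised with a least-squares straight line (an assumed way of expressing the rate as a function of luminosity). Function names are hypothetical; luminosities are in units of \(10^{34}\,\hbox {cm}^{-2}\hbox { s}^{-1}\).

```python
from typing import List, Sequence, Tuple


def stable_blocks(
    rates_hz: Sequence[float], lumis: Sequence[float], tolerance: float = 0.20
) -> List[bool]:
    """Flag luminosity blocks whose rate/luminosity ratio agrees with the others."""
    ratios = [r / l for r, l in zip(rates_hz, lumis)]
    n, total = len(ratios), sum(ratios)
    if n < 2:
        raise ValueError("need at least two luminosity blocks")
    keep = []
    for x in ratios:
        mean_others = (total - x) / (n - 1)
        keep.append(abs(x - mean_others) <= tolerance * mean_others)
    return keep


def fit_rate_vs_lumi(
    rates_hz: Sequence[float], lumis: Sequence[float], keep: Sequence[bool]
) -> Tuple[float, float]:
    """Least-squares line rate = slope * L + intercept over the kept blocks."""
    xs = [l for l, k in zip(lumis, keep) if k]
    ys = [r for r, k in zip(rates_hz, keep) if k]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx
```

Comparing each block with the mean of the others, rather than with the global mean, prevents a single large outlier from pulling the reference value towards itself.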

7.2 Measurement of the electron trigger efficiency

The electron trigger efficiency, denoted by \(\epsilon _\text {trig}\), can be measured either for electrons at the HLT (including L1) or for EM clusters at L1. It is estimated directly from data using the tag-and-probe method described in detail in Ref. [46]. This method selects, from a known resonance such as \(Z\rightarrow ee\), an unbiased sample of ‘probe’ electrons by using strict selection requirements on the second ‘tag’ object. The efficiency of any given requirement can then be determined by applying it to the probe sample, after accounting for residual background contamination.

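The total efficiency, \(\epsilon _\mathrm {total}\), may be factorised as a product of two efficiency terms:

\[\epsilon _\mathrm {total} = \epsilon _\mathrm {offline} \times \epsilon _\mathrm {trig} = \frac{N_\mathrm {offline}}{N_\mathrm {all}} \times \frac{N_\mathrm {trig}}{N_\mathrm {offline}},\]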

where \(N_\mathrm {all}\) is the number of produced electrons, \(N_\mathrm {trig}\) is the number of triggered electron candidates, \(N_\mathrm {offline}\) is the number of isolated, identified and reconstructed offline electron candidates and \(\epsilon _\mathrm {offline}\) is the offline efficiency [31]. The efficiency of a trigger is computed with respect to a specific offline isolation and identification WP. Therefore, when presenting the results in Sect. 10, several efficiencies per trigger are provided and these correspond to a few representative offline electron selections.

Events with \(Z\rightarrow ee\) decays are collected using unprescaled single-electron triggers (see Sect. 10.1 for details). The tag electron must be identified offline with the tight selection criteria (hereafter called ‘tight offline electron’), be geometrically matched to the object that fired the trigger, have \(E_{\text {T}}\) \(>27\,\)GeV and \(|\eta |<2.47\), and lie outside the transition region between the barrel and the endcaps, \(1.37<|\eta |<1.52\). For the electron trigger efficiency measurement, the isolation and identification requirements on the probe are always specified, and they have to correspond to the electron offline identification requirements used in an analysis. The background subtraction is performed with the so-called \(Z_\mathrm {mass}\) method [46], in which the invariant-mass distribution of the tag–probe pair is used to discriminate electrons from background. The signal efficiency is extracted in a window of \(\pm 15\,\)GeV around the Z boson mass [47] and its statistical and systematic uncertainties are derived as described in Ref. [41].
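
The counting step of such a measurement can be sketched as follows, assuming that the background yields in the mass window (for all probes and for trigger-passing probes) have already been estimated, for instance from a background template as in the \(Z_\mathrm {mass}\) method; the template fit itself is not shown, and the names are hypothetical. `Z_MASS_GEV` is the world-average Z boson mass.

```python
from dataclasses import dataclass
from typing import Sequence

Z_MASS_GEV = 91.1876
WINDOW_GEV = 15.0


@dataclass(frozen=True)
class Probe:
    m_tag_probe: float    # invariant mass of the tag-probe pair [GeV]
    passes_trigger: bool  # probe matched to a trigger object that fired


def tag_and_probe_efficiency(
    probes: Sequence[Probe], bkg_all: float, bkg_pass: float
) -> float:
    """Background-subtracted trigger efficiency in the +-15 GeV Z mass window.

    bkg_all / bkg_pass: estimated background yields in the window for all
    probes and for trigger-passing probes (obtained elsewhere).
    """
    in_window = [p for p in probes if abs(p.m_tag_probe - Z_MASS_GEV) <= WINDOW_GEV]
    n_all = len(in_window)
    n_pass = sum(p.passes_trigger for p in in_window)
    signal_all = n_all - bkg_all
    if signal_all <= 0:
        raise ValueError("no signal left after background subtraction")
    return (n_pass - bkg_pass) / signal_all
```

Subtracting background separately from the numerator and denominator matters because background probes typically pass the trigger at a different rate from genuine electrons.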

Simulated events need to be corrected to reproduce as closely as possible the efficiencies measured in data. This is achieved by applying an ‘efficiency correction factor’, defined as the ratio of the efficiency measured in data to that determined in simulated events, to the event weight in simulation. The impact of the choice of \(Z\rightarrow ee\) events for the efficiency measurement, and uncertainties in the background estimation, are assessed by varying the requirements on the selection of both the tag and probe electron candidates and by varying the details of the background subtraction method, as detailed in Ref. [41]. The correction factor and its systematic uncertainty are obtained from the mean and standard deviation, respectively, of the results produced by the set of independent variations of all these parameters. The statistical uncertainty is calculated as the average of the statistical uncertainties of all variations.
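
The combination of the variations can be sketched as below. Each variation is assumed to supply its own data-to-simulation efficiency ratio and the statistical uncertainty of that ratio; the use of the population (rather than sample) standard deviation is an assumption, and the names are hypothetical.

```python
import statistics
from typing import NamedTuple, Sequence


class Variation(NamedTuple):
    sf: float        # data/MC efficiency ratio for one choice of tag, probe and background settings
    stat_unc: float  # statistical uncertainty of that ratio


class CorrectionFactor(NamedTuple):
    value: float
    syst: float
    stat: float


def combine_variations(variations: Sequence[Variation]) -> CorrectionFactor:
    """Central value = mean, syst = standard deviation, stat = mean stat. uncertainty."""
    sfs = [v.sf for v in variations]
    return CorrectionFactor(
        value=statistics.mean(sfs),
        syst=statistics.pstdev(sfs),  # population std: an assumption of this sketch
        stat=statistics.mean(v.stat_unc for v in variations),
    )
```

The resulting `value` would then multiply the weight of each simulated event containing the selected electron, e.g. `weight *= result.value`.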

7.3 Measurement of the photon trigger efficiency

The photon trigger efficiency at the HLT (including L1), denoted by \(\epsilon ^{\gamma }_{\mathrm {trig}}\), can be measured by two complementary data-driven methods. The Bootstrap (BS) method uses photons triggered by a lower level or unbiased trigger, while the second method uses photons from radiative \(Z\rightarrow \ell \ell \gamma \) decays.

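The BS event sample is collected by L1-only triggers or by loose, low-\(E_{\text {T}}\) photon triggers. In the BS method, the photon trigger efficiency can be factorised as the product of two efficiency terms:

\[\epsilon ^{\gamma }_{\mathrm {trig}} = \epsilon _{\mathrm {HLT}|\mathrm {BS}} \times \epsilon _{\mathrm {BS}}.\]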

The efficiency of the HLT photon trigger relative to the BS trigger, \(\epsilon _{\mathrm {HLT}|\mathrm {BS}}\), is measured with offline photons in events of the BS sample. The BS sample efficiency, \(\epsilon _{\mathrm {BS}}\), is computed on collision events recorded by a special ‘random’ trigger, which runs at a rate of a few Hz, by comparing the number of BS events with the number of isolated, identified and reconstructed offline photon candidates in the sample. The background contamination in the BS sample is large, which could bias the efficiency estimate downwards. Those biases are expected to be small because the photon trigger efficiency is evaluated with respect to ‘tight’ and isolated offline photons and a few \(\text {GeV }\) above the trigger threshold. The trigger efficiency for background photons fulfilling the ‘tight’ offline identification is also very high, close to that for signal photons; an additional systematic uncertainty, described below, is assigned to account for any potential biases.
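
The two factors of the BS method, \(\epsilon ^{\gamma }_{\mathrm {trig}} = \epsilon _{\mathrm {HLT}|\mathrm {BS}} \times \epsilon _{\mathrm {BS}}\), can be illustrated with plain counts of offline photons. This sketch assumes unweighted counts (prescales of the BS and random triggers are ignored) and uses hypothetical field names.

```python
from typing import NamedTuple


class BootstrapCounts(NamedTuple):
    n_offline_in_bs: int      # offline photons in events of the BS sample
    n_offline_in_bs_hlt: int  # ... of which also pass the HLT photon trigger
    n_offline_random: int     # offline photons in randomly triggered events
    n_offline_random_bs: int  # ... of which also pass the BS trigger


def bootstrap_efficiency(c: BootstrapCounts) -> float:
    """Photon trigger efficiency as eps(HLT | BS) * eps(BS)."""
    eff_hlt_given_bs = c.n_offline_in_bs_hlt / c.n_offline_in_bs
    eff_bs = c.n_offline_random_bs / c.n_offline_random
    return eff_hlt_given_bs * eff_bs
```

The factorisation is useful because the random-trigger sample is small: it only has to measure the (typically very high) BS efficiency, while the large BS sample measures the HLT step.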

The systematic uncertainty of the trigger efficiency is computed as the discrepancy between the efficiency measured in data and in simulated H\(\rightarrow \gamma \gamma \) (high-\(E_{\text {T}}\)) and prompt-photon (low-\(E_{\text {T}}\)) samples. This approach to compute the systematic uncertainties is conservative, as it also includes the discrepancies between simulation and real data (mismodelling). The main underlying assumption is that the trigger efficiency in the MC simulation is close to the trigger efficiency in a pure sample of photons in data. This assumption is supported by the observation of good agreement between the trigger efficiencies in data and simulation for photons from \(Z\) radiative decays, discussed in Sect. 9.

The size of the data sample collected during Run 2 allows the use of photons from radiative Z decays to measure the photon trigger efficiencies. In this method the photon trigger efficiency is measured using a clean sample of prompt, isolated photons with relatively low \(p_{\text {T}} \) from \(Z\rightarrow \ell \ell \gamma \) (\(\ell =e, \mu \)) decays, in which a photon is produced from the final-state radiation of one of the two leptons from the Z boson decay.

Events triggered by the lowest-threshold unprescaled single and double electron and muon triggers are used to select \(Z\rightarrow ee\gamma \) and \(Z\rightarrow \mu \mu \gamma \) event candidates. The sample is selected by requiring events with two opposite-charge leptons (ee or \(\mu \mu \)) with \(p_{\text {T}} >10\) \(\text {GeV }\) and a ‘tight’ photon candidate within \(|\eta |<2.37\), excluding the calorimeter transition region, and with \(E_{\text {T}} >10\) \(\text {GeV }\). The photon candidate is further required to satisfy an isolation WP of interest. Both leptons are required to satisfy the ‘medium’ identification and ‘FCLoose’ isolation criteria, and must have \(|\eta |<2.47\), with \(|z_{0}|<10\) mm and \(|d_{0}/\sigma (d_{0})|<10\). The separation between the photon and each lepton is required to be \(\Delta R>0.2\). Figure 3 shows the distribution of \(m_{\ell \ell }\) vs the three-body mass, \(m_{\ell \ell \gamma }\). The invariant mass of the two leptons must be within \(40<m_{\ell \ell }<83\) \(\text {GeV }\) to reject events in which a \(Z\rightarrow \ell \ell \) decay is produced in association with a photon coming from initial-state radiation. The invariant mass of the three-body system is required to be \(86<m_{\ell \ell \gamma }<96\) \(\text {GeV }\). With these requirements, only photons originating from \(Z\) radiative decays are selected. If more than one \(\ell \ell \gamma \) candidate is found, the one with the three-body mass closest to the Z boson mass is selected.
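
The kinematic part of this selection can be sketched as below. It assumes massless four-vectors built from (\(p_{\text {T}}\), \(\eta \), \(\phi \)), leptons that already satisfy the identification, isolation and impact-parameter requirements, and photons that already satisfy the identification and isolation requirements; the transition region is taken as \(1.37<|\eta |<1.52\). Names are hypothetical, and `Z_MASS_GEV` is the world-average Z boson mass.

```python
import math
from dataclasses import dataclass
from itertools import combinations
from typing import Optional, Sequence, Tuple

Z_MASS_GEV = 91.1876


@dataclass(frozen=True)
class Particle:
    pt: float        # GeV
    eta: float
    phi: float
    charge: int = 0  # 0 for photons


def inv_mass(*parts: Particle) -> float:
    """Invariant mass of massless particles given (pt, eta, phi)."""
    e = px = py = pz = 0.0
    for p in parts:
        e += p.pt * math.cosh(p.eta)
        px += p.pt * math.cos(p.phi)
        py += p.pt * math.sin(p.phi)
        pz += p.pt * math.sinh(p.eta)
    return math.sqrt(max(e * e - px * px - py * py - pz * pz, 0.0))


def delta_r(a: Particle, b: Particle) -> float:
    dphi = (a.phi - b.phi + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(a.eta - b.eta, dphi)


def select_llg(
    leptons: Sequence[Particle], photons: Sequence[Particle]
) -> Optional[Tuple[Particle, Particle, Particle]]:
    """Return the (l1, l2, photon) candidate with m_llg closest to the Z mass."""
    best, best_diff = None, math.inf
    for l1, l2 in combinations(leptons, 2):
        if l1.charge * l2.charge >= 0 or min(l1.pt, l2.pt) <= 10.0:
            continue
        if not 40.0 < inv_mass(l1, l2) < 83.0:  # reject Z + ISR photon events
            continue
        for g in photons:
            if g.pt <= 10.0 or abs(g.eta) >= 2.37 or 1.37 < abs(g.eta) < 1.52:
                continue
            if min(delta_r(g, l1), delta_r(g, l2)) <= 0.2:
                continue
            m_llg = inv_mass(l1, l2, g)
            if 86.0 < m_llg < 96.0 and abs(m_llg - Z_MASS_GEV) < best_diff:
                best, best_diff = (l1, l2, g), abs(m_llg - Z_MASS_GEV)
    return best
```

The selected photon is then the probe whose trigger decision enters the efficiency, with the two leptons acting as tags.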

The invariant mass of the two leptons, \(m_{\ell \ell }\), vs the invariant mass of the three-body system, \(m_{\ell \ell \gamma }\), in \(Z\rightarrow ee \gamma \) and \(Z\rightarrow \mu \mu \gamma \) decays in 2017 pp data. Events with a photon and a Z\(\rightarrow \ell \ell \) decay are visible as a horizontal band, which peaks around \(m_{\ell \ell } = 90\) \(\text {GeV }\) for three-body invariant masses above 96 \(\text {GeV }\), and can be easily separated from \(Z\) radiative decay events, which are concentrated in a vertical band around \(m_{\ell \ell \gamma } = 90\) \(\text {GeV }\)

The statistical uncertainty associated with the \(Z\) radiative decay method is calculated as a confidence interval of a Bayesian estimate with the Jeffreys prior [48]. The systematic uncertainty is estimated by following the strategy used in the \(Z\rightarrow ee\) tag-and-probe method for electron measurements described in Sect. 7.2. In this case, the two leptons are the tags, and the photon is the probe. The systematic uncertainty is estimated from variations in the trigger efficiency measurement resulting from changing the requirements on the leptons and on the dilepton and three-body systems. The requirement on the invariant mass of the dilepton system is varied from \(30<m_{\ell \ell }<83\) \(\text {GeV }\) to \(50<m_{\ell \ell }<90\) \(\text {GeV }\). The three-body system mass requirement is varied from \(65<m_{\ell \ell \gamma }<105\) \(\text {GeV }\) to \(80<m_{\ell \ell \gamma }<95\) \(\text {GeV }\). In addition, when considering the electron channel, the identification of the tags is changed from ‘tight’ to ‘medium’ for one or both electrons.
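
For \(k\) triggered photons out of \(n\) probes, the Jeffreys prior gives a Beta(\(k+1/2\), \(n-k+1/2\)) posterior for the efficiency, and a central interval follows from its quantiles. The sketch below assumes a 68.3% confidence level and the common convention of setting the lower (upper) bound to 0 (1) when \(k=0\) (\(k=n\)); it computes the regularised incomplete beta function with the standard continued-fraction method so that it needs only the standard library. In practice, `scipy.stats.beta.ppf(q, a, b)` provides the same quantiles.

```python
import math
from typing import Tuple


def _betacf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd coefficients of the continued fraction for this m.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def betainc_reg(a: float, b: float, x: float) -> float:
    """Regularised incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def beta_ppf(q: float, a: float, b: float, tol: float = 1e-12) -> float:
    """Quantile of the Beta(a, b) distribution by bisection."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if betainc_reg(a, b, mid) < q:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def jeffreys_interval(k: int, n: int, cl: float = 0.6827) -> Tuple[float, float]:
    """Central Jeffreys interval for an efficiency k/n."""
    if n <= 0 or not 0 <= k <= n:
        raise ValueError("need 0 <= k <= n and n > 0")
    a, b = k + 0.5, n - k + 0.5
    tail = 0.5 * (1.0 - cl)
    lower = 0.0 if k == 0 else beta_ppf(tail, a, b)
    upper = 1.0 if k == n else beta_ppf(1.0 - tail, a, b)
    return lower, upper
```

Unlike a Gaussian approximation, this interval stays within [0, 1], which matters on the efficiency plateau where \(k\) is close to \(n\).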

Figure 4 shows a comparison between the BS and \(Z\) radiative decay methods for ‘medium’ photon triggers in 2018. The small difference in performance in the turn-on is due to the different purities of the samples: there are significant backgrounds in the BS sample and almost no background in the \(Z\) radiative decay measurement, leading to a slightly higher efficiency computed with the \(Z\) radiative decay method. This is expected, as the efficiency for the trigger to select the background present in the BS sample is lower than the efficiency for the trigger to select real photons. Typically, physics analyses use the photon triggers to select objects with \(E_{\text {T}}\) at least 5 \(\text {GeV }\) above the trigger threshold. In that regime, the efficiency measurements of both methods give compatible results. The \(Z\) radiative decay method provides a data-driven sample of photons with very high purity to compute the efficiency for trigger thresholds below 60 \(\text {GeV }\); above this value the BS method is used.

The photon trigger efficiency as a function of the offline photon \(E_{\text {T}} \) for both legs of the primary diphoton trigger in the 2018 pp data. The measurement using the BS method (open markers) is compared with that using the \(Z\) radiative decay (filled markers) method. The efficiency is computed with respect to offline photons satisfying ‘tight’ identification criteria and a ‘calorimeter-only tight’ isolation requirement. Offline photon candidates in the calorimeter transition region \(1.37< |\eta | < 1.52 \) are not considered. The error bars indicate statistical uncertainties

8 L1 trigger evolution and performance

Table 5 shows the lowest-threshold unprescaled single-object and diobject L1 EM triggers in the different data-taking periods during Run 2. Figure 5 shows the L1 rates for the single-object and diobject EM calorimeter triggers during Run 2. Among the triggers shown there are two single-object triggers not used in Run 2: the L1_EM20VHI trigger, to highlight the additional rejection from the EM isolation requirement, and L1_EM24VHI, which was a ‘backup’ trigger for single EM objects. The single-object L1 rates in Fig. 5a are well described by a linear fit as a function of luminosity, with an approximately zero intercept, indicating a negligible contribution from effects not related to pp collisions, as expected for such a narrow RoI window. For single-object L1 EM triggers with \(E_{\text {T}}\) in the range 20–24 \(\text {GeV }\), the rate is reduced by approximately 25% when the threshold is raised by \(2\,\) \(\text {GeV }\). For diobject L1 EM triggers this reduction in rate depends on the threshold: for a 5 \(\text {GeV }\) increase from L1_2EM10VH (L1_2EM15VH) a reduction of 90% (50%) is achieved. An additional EM isolation requirement (I) leads to a consistent rate reduction of about 44% per leg for single-object (L1_EM20VH) and diobject (L1_2EM15VH) triggers and a pile-up-dependent efficiency loss of at most 5% up to 50 \(\text {GeV }\), as shown in Fig. 6. No isolation requirements are applied above this \(E_{\text {T}}\) value.
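
The quoted single-object rate reduction can be turned into a rough rule of thumb under one explicit assumption: every 2 GeV step removes the same fraction (about 25%) of the remaining rate. The text supports this only for thresholds in the 20–24 GeV range, so the sketch below (hypothetical function name) should not be extrapolated beyond it.

```python
def rate_fraction_after_raise(
    delta_threshold_gev: float, reduction_per_2gev: float = 0.25
) -> float:
    """Fraction of the single-object L1 EM rate kept after raising the threshold.

    Assumes geometric compounding: each 2 GeV step removes the same fraction
    of the remaining rate (supported by the text only for 20-24 GeV).
    """
    if delta_threshold_gev < 0:
        raise ValueError("this sketch only handles raising the threshold")
    return (1.0 - reduction_per_2gev) ** (delta_threshold_gev / 2.0)


for delta in (2.0, 4.0):
    print(f"+{delta:.0f} GeV: {rate_fraction_after_raise(delta):.2f} of the original rate")
```

Under this assumption, raising a 20 GeV single-object threshold to 24 GeV keeps roughly 56% of the rate.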

Efficiency of L1 single EM object triggers as a function of a the offline electron \(E_{\text {T}}\) and b pile-up. The offline reconstructed electron is required to pass a likelihood-based ‘tight’ identification. The efficiencies are measured with a tag-and-probe method in \(Z\rightarrow ee\) data events, using offline monitoring tools described in Sect. 12. The error bars show the statistical uncertainties only. No background subtraction is applied, as the effect is expected to be negligible. For b, only offline candidates with \(E_{\text {T}}\) values at least 1 \(\text {GeV }\) above the corresponding trigger threshold are considered

Dedicated combined-object triggers such as three EM clusters or an EM cluster with a muon, \(\tau \)-lepton, jet, or missing transverse momentum are also implemented at L1, allowing L1 EM trigger thresholds to be lowered to 7 \(\text {GeV }\). Some L1 triggers do not require an additional EM object for their rate reduction. In this case, their HLT photon/electron reconstruction is seeded by the lowest available L1 EM threshold, which is 3 \(\text {GeV }\).

Additional topological requirements (invariant mass, \(\Delta R\), etc.) can also be applied to L1 triggers to further reduce the rate. For example, prescaled triggers used to collect \(J/\psi \rightarrow ee\) events for the low-\(E_{\text{T}}\) electron performance studies [31, 38, 41] have at least one L1 EM threshold as low as 3 \(\text{GeV}\) and a requirement on the invariant mass of the EM object pairs to lie between 1 and 5 \(\text {GeV }\)[49]. The latter requirement leads to trigger rate reduction factors of 4–9, depending on the exact trigger threshold configuration.
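
The quantity cut on in such a topological requirement is the invariant mass of a pair of EM objects; for massless objects, \(m^2 = 2E_{\text {T},1}E_{\text {T},2}(\cosh \Delta \eta - \cos \Delta \phi )\). The floating-point sketch below only illustrates this quantity: the L1 hardware works with coarser, fixed-precision inputs and is not reproduced here. Requiring both clusters to pass `min_et` is a simplification, since the text only states that at least one L1 EM threshold is as low as 3 GeV; the names are hypothetical.

```python
import math
from itertools import combinations
from typing import Iterable, Tuple

Cluster = Tuple[float, float, float]  # (E_T [GeV], eta, phi)


def pair_mass(c1: Cluster, c2: Cluster) -> float:
    """Invariant mass of two massless objects: m^2 = 2 E_T1 E_T2 (cosh d_eta - cos d_phi)."""
    (et1, eta1, phi1), (et2, eta2, phi2) = c1, c2
    m2 = 2.0 * et1 * et2 * (math.cosh(eta1 - eta2) - math.cos(phi1 - phi2))
    return math.sqrt(max(m2, 0.0))


def passes_mass_window(
    clusters: Iterable[Cluster],
    m_low: float = 1.0,
    m_high: float = 5.0,
    min_et: float = 3.0,
) -> bool:
    """True if any pair of EM clusters above min_et has m_low < m < m_high (GeV)."""
    selected = [c for c in clusters if c[0] >= min_et]
    return any(m_low < pair_mass(a, b) < m_high for a, b in combinations(selected, 2))
```

For two 3 GeV clusters at the same \(\eta \), the mass is about 4.2 GeV at \(\Delta \phi = \pi /2\) (accepted) and 6 GeV when back to back (rejected), which shows how the window favours the collimated pairs expected from low-mass resonances.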

9 Photon trigger evolution and performance in pp data-taking

9.1 Evolution of photon triggers in Run 2

Table 6 shows the lowest-threshold unprescaled photon triggers in different data-taking periods during Run 2. The ‘loose’ and ‘medium’ identification requirements remained unchanged throughout 2015–2018. An optimisation of the selection for the online ‘tight’ definition was performed at the end of 2017 in order to synchronise with a reoptimised offline ‘tight’ photon selection. The calorimeter-only isolation requirement (icalovloose) was implemented in the HLT for tight diphoton triggers for the first time in 2017.

9.2 Primary single-photon and diphoton triggers

HLT output rate as a function of instantaneous luminosity for the primary a single-photon triggers and b diphoton triggers in 2015–2018. The changes between years are detailed in Sect. 9.1

The lowest-threshold unprescaled single-photon trigger is primarily designed to trigger on high-\(E_{\text {T}}\) photons in searches for new phenomena beyond the Standard Model. This primary single-photon trigger uses the ‘loose’ identification requirement, with no isolation applied. Figure 7 shows the HLT trigger rates for photon triggers as a function of instantaneous luminosity. The \(E_{\text {T}}\) threshold of the single-photon trigger was increased from 120 to 140 \(\text {GeV }\) in 2016 to keep its acceptance rate below 50 Hz, as shown in Fig. 7a.

The efficiencies of the single-photon triggers in 2015–2018, measured with the BS method, are shown in Fig. 8 as a function of \(E_{\text {T}} \) and \(\eta \). The total uncertainties, shown as vertical bars, are dominated by systematic uncertainties, especially differences between data and Monte Carlo simulation. The trigger efficiency measurement has a total uncertainty of the order of 1% for photons with \(E_{\text {T}}\) values 5 \(\text {GeV }\) above the trigger threshold, and an uncertainty of less than 0.1% for photons at least 10 \(\text {GeV }\) above the trigger threshold.

Efficiencies of single-photon triggers in 2015–2018 as a function of the offline photon a \(E_{\text {T}} \) and b \(\eta \). The changes between years are detailed in Sect. 9.1. The efficiency is computed with respect to offline photons satisfying ‘tight’ identification criteria and a ‘calorimeter-only tight’ isolation requirement. The ratios of data to MC simulation efficiencies are also shown. The total uncertainties, shown as vertical bars, are dominated by systematic uncertainties. Offline photon candidates in the calorimeter transition region \(1.37< |\eta | < 1.52 \) are not considered. For b, only offline candidates with \(E_{\text {T}}\) values 5 \(\text {GeV }\) above the corresponding trigger threshold are used

Primary diphoton triggers are mainly designed for efficient selection of events with Higgs boson candidates in the diphoton decay channel. Trigger \(E_{\text {T}}\) thresholds of 35 and 25 \(\text {GeV }\) for the leading and subleading photons allow the collection of diphoton events with invariant masses low enough for good background modelling for resonances above 120 \(\text {GeV }\), such as the Higgs boson [50, 51]. During 2015 and 2016 ‘loose’ identification was used at the HLT for primary diphoton triggers. During 2017–2018, ‘medium’ identification was used in order to keep the primary diphoton trigger rate below 20 Hz at higher values of instantaneous luminosity, as shown in Fig. 7b. The rate of the primary diphoton triggers shows a linear dependence on the instantaneous luminosity. As shown in Table 6, diphoton triggers with ‘loose’ identification were maintained at higher trigger \(E_{\text {T}}\) thresholds (50 \(\text {GeV }\)).

To measure the efficiency of primary diphoton triggers in data, photons from \(Z\) radiative decays are used. Trigger efficiencies for each of the legs of the diphoton trigger are measured separately and then combined at the analysis level. This approach is used for all the multi-object and combined triggers. The efficiencies for the 25 \(\text {GeV }\) leg of the primary diphoton triggers in 2015–2018 are shown in Fig. 9. Slightly lower efficiencies are observed in 2017–2018 due to the tightening of the online photon identification from the ‘loose’ to the ‘medium’ WP: the ‘medium’ leg is \(\sim95\%\) efficient for events with offline ‘tight’ isolated photons with \(E_{\text {T}}\) at least 5 \(\text {GeV }\) above the trigger threshold. Trigger efficiencies show no significant dependence on \(\eta \) or \(\langle \mu \rangle \), remaining close to 100% during most of Run 2; the 2017 efficiency is the lowest of all years due to a different LHC bunch structure. The total uncertainties, shown as vertical bars, are dominated by statistical uncertainties. The ratios of the efficiency measured in data to that in MC simulation are shown in Fig. 9 as functions of \(E_{\text {T}} \), \(\eta \) and \(\langle \mu \rangle \), and are close to 1 in all cases, confirming good data/MC simulation agreement and validating the systematic uncertainty procedure for the BS method.
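
As an illustration of combining per-leg efficiencies for an asymmetric diphoton trigger, consider two assumptions made here only for this sketch: the two photons pass their legs independently, and any photon passing the higher-threshold leg also passes the lower one. With \(a_i\) and \(b_i\) the efficiencies of photon \(i\) for the high- and low-threshold legs, the event fires if one photon passes the high leg and the other the low leg, so by inclusion–exclusion the event efficiency is \(a_1 b_2 + b_1 a_2 - a_1 a_2\). The function name is hypothetical.

```python
def diphoton_event_efficiency(a1: float, b1: float, a2: float, b2: float) -> float:
    """Probability that photons 1 and 2 fire an asymmetric diphoton trigger.

    a_i: efficiency of photon i for the high-threshold leg,
    b_i: efficiency of photon i for the low-threshold leg (nested: a_i <= b_i).
    Assumes the two photons pass their legs independently.
    """
    for a, b in ((a1, b1), (a2, b2)):
        if not 0.0 <= a <= b <= 1.0:
            raise ValueError("expected 0 <= a_i <= b_i <= 1 (nested legs)")
    return a1 * b2 + b1 * a2 - a1 * a2
```

The subtracted term removes the double counting of events in which both photons pass the high-threshold leg, which under the nesting assumption are the only events satisfying both assignments.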

Efficiencies of the 25 \(\text {GeV }\) leg of primary diphoton triggers in 2015–2018 as a function of the offline photon a \(E_{\text {T}} \), b \(\eta \) and c \(\langle \mu \rangle \). The changes between years are detailed in Sect. 9.1. The ratios of data to MC simulation efficiencies are also shown. The efficiency is computed with respect to offline photons satisfying ‘tight’ identification criteria and a ‘calorimeter-only tight’ isolation requirement. Offline photon candidates in the calorimeter transition region \(1.37< |\eta | < 1.52\) are not considered. For b and c, only offline candidates with \(E_{\text {T}} >30\) \(\text {GeV }\) are used. The error bars indicate statistical and systematic uncertainties combined in quadrature

9.3 Tight diphoton triggers for searches for low-mass resonances

Diphoton triggers with lower \(E_{\text {T}}\) thresholds and tighter identification criteria are designed to collect events for beyond the Standard Model low-mass diphoton resonance searches [52]. These searches require the trigger \(E_{\text {T}}\) thresholds to be kept symmetric and as low as possible. Run 2 trigger thresholds allow searches to reach diphoton invariant masses down to \(\sim \) 60 \(\text {GeV }\). These triggers are constrained by both the L1 and HLT rates. The L1_2EM15VH threshold was used in 2015–2016 and L1_2EM15VHI, which includes EM isolation at L1, was used in 2017–2018. The HLT rate for these triggers was about 16 Hz as shown in Fig. 10. The HLT thresholds were kept at 20 \(\text {GeV }\) in 2015, and then were increased to 22 \(\text {GeV }\) as the peak luminosity rose above \(1.2\times 10^{34}\,\hbox {cm}^{-2}\hbox { s}^{-1}\) in 2016. The use of the topo-cluster-based calorimeter isolation in the HLT allowed the thresholds to be lowered back to 20 \(\text {GeV }\) for the 2017–2018 data-taking period, despite the higher instantaneous luminosity and more challenging pile-up conditions.

The HLT output rate as a function of instantaneous luminosity for tight diphoton triggers in 2015–2018. The changes between years are detailed in Sect. 9.1

Figure 11 shows the low-mass diphoton trigger efficiencies as a function of \(E_{\text {T}}\), \(\eta \) and pile-up. The efficiency is computed with the \(Z\) radiative decay method for a single photon trigger leg of the diphoton trigger with respect to offline photons satisfying the tight identification criteria and the calorimeter-only tight isolation requirement. Slightly lower efficiency is observed for 2017 triggers due to a different LHC bunch structure as well as loosening of the offline tight photon selection, which was applied online only for the 2018 data-taking period. Triggers in 2017–2018 also suffer from some inefficiency due to L1 isolation up to \(\sim \) 50 \(\text {GeV }\), as discussed in Sect. 8. The isolated trigger g20_tight_icalovloose_L1EM15VHI exhibits a degradation in efficiency of 4–5% when \(\langle \mu \rangle \) rises from 20 to 60; this effect is visible in Fig. 11c. Above \(\langle \mu \rangle\sim\)55, the trend to lower efficiency continues and the statistical uncertainty becomes large. The reoptimisation of the online tight identification selection criteria improved the efficiency of these triggers in 2018 relative to 2017 at \(\langle \mu \rangle \) values above \(\sim \) 40.

Evolution of efficiencies for tight diphoton trigger legs as a function of the offline photon a \(E_{\text {T}} \), b \(\eta \), and c \(\langle \mu \rangle \) during Run 2. The changes between years are detailed in Sect. 9.1. The efficiency is computed with respect to offline photons satisfying tight identification criteria and the calorimeter-only tight isolation requirement. The ratios of data to MC simulation efficiencies are also shown. The total uncertainties, shown as vertical bars, are dominated by statistical uncertainties. Offline photon candidates in the calorimeter transition region \(1.37< |\eta | < 1.52 \) are not considered. For b and c, only offline candidates with \(E_{\text {T}}\) values 5 \(\text {GeV }\) above the corresponding trigger threshold are used

10 Electron trigger evolution and performance in pp data-taking

10.1 Evolution of electron triggers in Run 2

The evolution of the Run 2 electron trigger thresholds and identification requirements for the main unprescaled triggers is summarised in Table 7.

In addition to the threshold increases, there were also changes in the underlying electron configuration and selection requirements, as summarised in Table 8. These changes are not always reflected in the trigger names. For example, the Ringer algorithm (described in Sect. 6.3.1) was introduced in 2017. The additional background rejection it provided allowed looser fast electron and precision calorimeter selections. In particular, in 2015–2016 the precision calorimeter step used a multivariate selection based on a LH discriminant constructed similarly to the standard offline precision selection, but using only calorimetric variables. This discriminant had \(\sim \) 4% inefficiency relative to the offline selection, which was removed in 2017–2018 by moving to a simpler requirement based only on \(E_{\text {T}}\).

The 2015 and 2016 pdfs for the electron LH were derived from simulation samples described in Sect. 4. The pdfs for the trigger electrons with \(E_{\text {T}}\) below (above) 15 \(\text {GeV }\) were determined with \(J/\psi \rightarrow ee\) (\(Z\rightarrow ee\)) MC samples, and corrected for differences between data and simulation [41]. The 2017 pdfs for electrons and background above 15 \(\text {GeV }\) were derived from data as detailed in Ref. [31]; pdf ‘smoothing’ was also introduced online for all triggers. The 2018 electron data-driven pdfs for all working points except the ‘medium’ one were updated with 2017 data, maintaining the original selection criteria and optimising for higher pile-up conditions (\(\langle \mu \rangle \) up to 100). The 2018 pdfs for electrons with \(E_{\text {T}}\) below 15 \(\text {GeV }\) were also derived from data as detailed in Ref. [31].

10.2 Ringer algorithm performance

In 2017, triggers collected data online simultaneously with and without the Ringer algorithm, allowing an evaluation of its performance. For \(Z\rightarrow ee\) decays, no difference in efficiency is observed, as shown for two triggers in Fig. 12. However, some special cases (such as events with merged electrons from decays of boosted dibosons) suffer efficiency losses as a result of using the Ringer algorithm, as shown in Fig. 13. The efficiency drops for \(E_{\text {T}} >400\) \(\text {GeV }\) because the clusters of the two electrons begin to overlap; at very high \(E_{\text {T}}\), however, the two clusters are so close that they behave as a single cluster, and all triggers become efficient again. For the \(E_{\text {T}}\) range above 300 \(\text {GeV }\), a trigger with only an \(E_{\text {T}}\) selection in the HLT, e300_etcut, is available.

The efficiency of electron triggers with the Ringer algorithm and with the cut-based algorithm as a function of the offline electron (a) \(E_{\text {T}}\), (b) \(\eta \) and (c) pile-up. Efficiency is given with respect to the offline tight identification working point. For (b) and (c), only offline candidates with \(E_{\text {T}} >29\) \(\text {GeV }\) are used.

The efficiency for electrons from \(gg\rightarrow \mathrm {radion\,(3\,\text {TeV }{})}\rightarrow VV\rightarrow ee qq\) as a function of (a) the offline electron \(E_{\text {T}}\) and (b) \(\Delta R\) between the two electrons. Efficiency is given with respect to the offline loose identification and the FCLoose isolation working point. For (b), only offline candidates with \(E_{\text {T}} >400\) \(\text {GeV }\) are used.
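The \(\Delta R\) variable in Fig. 13b is the usual angular separation \(\Delta R = \sqrt{(\Delta\eta)^2 + (\Delta\phi)^2}\). A minimal sketch, assuming electrons are given as hypothetical (E_T in GeV, eta, phi) tuples, shows how it can be computed with \(\Delta\phi\) wrapped into \([-\pi, \pi)\), and how the \(E_{\text {T}} >400\) GeV requirement on the probe candidate could be applied.

```python
import math


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance sqrt(deta^2 + dphi^2), with dphi wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def probe_delta_r(probe, other, et_min=400.0):
    """Return dR between a probe and the other electron if the probe has
    E_T > et_min (GeV); otherwise None. Inputs are (et, eta, phi) tuples."""
    if probe[0] <= et_min:
        return None
    return delta_r(probe[1], probe[2], other[1], other[2])
```

Wrapping \(\Delta\phi\) matters because two electrons at \(\phi \approx +\pi\) and \(\phi \approx -\pi\) are close in azimuth even though the raw difference is nearly \(2\pi\).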

10.3 Single-electron triggers

Dependence of the trigger rate on the luminosity for the lowest-threshold unprescaled isolated single-electron triggers in 2015–2018. The changes between years are detailed in Sect. 10.1

One of the main features of the Run 2 trigger menu is the unprescaled single-electron trigger, with an \(E_{\text {T}}\) threshold of 24 \(\text {GeV }\) in 2015 and 26 \(\text {GeV }\) in 2016–2018. This single-electron trigger ensures the collection of the majority of events with leptonic W and Z boson decays, which are present in a wide range of measurements and searches in ATLAS. Although the threshold of this trigger is mainly constrained by the L1 bandwidth, as discussed in Sect. 8, the need to keep both the threshold and the HLT rate low places strong constraints on the tightness of the identification used by this trigger in the HLT. Relying on this trigger provides a simple and inclusive strategy, widely used in the ATLAS physics programme, at a cost of about \(20\%\) of the total L1 and HLT rate.

Figure 14 shows the rates for the lowest-threshold unprescaled isolated single-electron triggers used during Run 2 as a function of the instantaneous luminosity. The LH selection (‘lhtight’) of the lowest-threshold unprescaled isolated single-electron trigger is tuned for a given rate, which remained unchanged in 2016–2018.

The electron trigger candidates originate from various processes, as shown in Fig. 15, which presents the lowest-threshold unprescaled isolated single-electron trigger rate as a function of the HLT electron \(E_{\text {T}}\)-threshold value, broken down by process. The total rate is measured in a data set collected at a constant instantaneous luminosity of \(8\times 10^{33}\,\hbox {cm}^{-2}\hbox { s}^{-1}\) at \(\sqrt{s}=13\) \(\text {TeV }\), while the individual contributions from W, Z and multijet production are estimated with MC simulation. The dominant uncertainty in the multijet rate is evaluated with a data-driven technique: the rate as a function of \(E_{\text {T}}\) is obtained in a multijet-enriched region by inverting the HLT track-based electron isolation, and the bin-by-bin disagreement between data and MC simulation in this region is applied as a systematic uncertainty in the multijet contribution. The total expected rate agrees with the measured value for all the thresholds considered. Most of the rate comes from physics processes of interest such as W and Z production, while jets misidentified as electrons form a significant but not dominant background.
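The rate bookkeeping described above can be sketched as follows. This is one plausible reading, stated as an assumption: per threshold bin, the expected rate is the sum of the simulated W, Z and multijet rates, and the relative data/MC disagreement in the inverted-isolation control region, \(|N_{\text{data}} - N_{\text{MC}}|/N_{\text{MC}}\), is applied to the multijet rate as its systematic uncertainty. All function and argument names are hypothetical.

```python
def expected_rates(mc_rates, cr_data, cr_mc):
    """Per threshold bin, return (total expected rate, multijet systematic).

    mc_rates: dict process -> list of simulated rates per bin (Hz),
              must contain a 'multijet' entry (assumed layout).
    cr_data, cr_mc: rates per bin in the multijet-enriched control region
              (inverted HLT track isolation) in data and in simulation.
    """
    results = []
    for i, (d, m) in enumerate(zip(cr_data, cr_mc)):
        total = sum(rates[i] for rates in mc_rates.values())
        rel_disagreement = abs(d - m) / m  # bin-by-bin data/MC difference
        syst = mc_rates["multijet"][i] * rel_disagreement
        results.append((total, syst))
    return results


def is_compatible(measured, total, syst, n_sigma=1.0):
    """Check whether the measured rate lies within n_sigma * syst of the prediction."""
    return abs(measured - total) <= n_sigma * syst
```

Only the multijet term carries the data-driven uncertainty here, mirroring the statement that it dominates; statistical uncertainties and uncertainties in the W and Z predictions are omitted for brevity.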

Dependence of the single-electron-trigger rates on the luminosity for (a) the lowest-threshold unprescaled, non-isolated and (b) the loose unprescaled single-electron triggers in 2015–2018. The changes between years are detailed in Sect. 10.1.

At higher \(E_{\text {T}}\), additional triggers with no isolation requirements and looser identification are introduced. The rates for the lowest-threshold unprescaled, non-isolated triggers with a requirement of \(E_{\text {T}}\) above 60 \(\text {GeV }\) and ‘lhmedium’ identification are shown in Fig. 16a. The rates for the loose unprescaled triggers with the ‘lhloose’ identification working point and \(E_{\text {T}}\) above 120–140 \(\text {GeV }\) are shown in Fig. 16b. These higher-\(E_{\text {T}}\) triggers have rates lower by one and two orders of magnitude, respectively, than the lowest-threshold unprescaled isolated single-electron trigger. The three single-electron triggers (whose exact configurations are detailed in Table 7) are used together in a typical analysis selection, and an event is selected if it passes any of them. This configuration is called the ‘single-electron trigger combination’. There is also a very high-\(E_{\text {T}}\) trigger, e300_etcut, running at a rate of up to 5 Hz at \(2\times 10^{34}\,\hbox {cm}^{-2}\hbox { s}^{-1}\). This trigger allows the collection of an unbiased sample of events with very high energy deposits in the EM calorimeter, as discussed in Sect. 10.2.
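The ‘single-electron trigger combination’ is a logical OR of trigger decisions. A minimal sketch, assuming each probe electron carries a dictionary of trigger-name to pass/fail decisions, computes the combined efficiency with a simple binomial uncertainty. The names e26_lhtight_nod0_ivarloose and e60_lhmedium_nod0 appear in this section; e140_lhloose_nod0 for the loose trigger is an assumption (the actual configuration is given in Table 7).

```python
import math

# First two names appear in the text; the third is an assumed example.
SINGLE_ELECTRON_COMBINATION = (
    "e26_lhtight_nod0_ivarloose",
    "e60_lhmedium_nod0",
    "e140_lhloose_nod0",
)


def passes_combination(decisions, triggers=SINGLE_ELECTRON_COMBINATION):
    """True if the probe passes at least one trigger in the combination."""
    return any(decisions.get(name, False) for name in triggers)


def combination_efficiency(probes, triggers=SINGLE_ELECTRON_COMBINATION):
    """Fraction of probes passing the OR, with a binomial uncertainty."""
    n = len(probes)
    k = sum(passes_combination(p, triggers) for p in probes)
    eff = k / n
    return eff, math.sqrt(eff * (1.0 - eff) / n)
```

Because the OR can only add events, the combined efficiency is never lower than that of any single member; the higher-threshold, looser triggers mainly recover probes that fail the isolation or tight identification of the lowest-threshold trigger.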

Evolution of the single-electron trigger combination efficiency as a function of the offline electron (a) \(E_{\text {T}}\) and (b) \(\eta \) during Run 2. The changes between years are detailed in Sect. 10.1. Efficiency is given with respect to the offline tight identification and the FCTight isolation working point. The ratios of data to MC simulation efficiencies are also shown. The error bars indicate statistical and systematic uncertainties combined in quadrature. For (b), only offline candidates with \(E_{\text {T}}\) values at least 1 \(\text {GeV }\) above the corresponding trigger threshold are used.

The evolution of the single-electron trigger combination efficiency in 2015–2018 is shown in Fig. 17. The offline electron is required to pass the tight identification and FCTight isolation requirements. The FCTight isolation working point is chosen because it is the only offline isolation requirement that is more restrictive than the isolation applied online. The sharper efficiency turn-on as a function of \(E_{\text {T}}\) in 2015, shown in Fig. 17a, is due to a looser identification requirement (‘lhmedium’ versus ‘lhtight’ from 2016), a lower \(E_{\text {T}}\) threshold (24 \(\text {GeV }\) versus 26 \(\text {GeV }\) from 2016) and the absence of an isolation requirement. Although similar identification, isolation and \(E_{\text {T}}\) requirements are imposed in the single-electron triggers in 2016–2018, some inefficiency at \(E_{\text {T}} < 60\) \(\text {GeV }\) is observed in 2016. This is explained by the different electron trigger configuration used in 2016, in particular the inefficiency of the calorimeter-only LH selection at the precision step. In 2015–2016, the triggers used simulation-based LH pdfs and were optimised relative to a different offline selection [41], which results in some inefficiency; from 2017, a data-driven likelihood selection and a looser fast selection with the Ringer algorithm recover the trigger efficiency at \(E_{\text {T}} <60\) \(\text {GeV }\). The main remaining sources of inefficiency are the L1 electromagnetic isolation requirements discussed in Sect. 8. As shown in Fig. 17b, the single-electron trigger combination efficiency is lower in the \(1.37<|\eta |<1.52\) and \(|\eta |>2.37\) regions, where a significant amount of inactive material is present. A more detailed investigation of the sources of inefficiency relative to the offline selection is presented below.

The MC simulation efficiency correction factors, defined in Sect. 7.2 and shown in the lower panels of Fig. 17, deviate from unity by up to 18% close to the trigger \(E_{\text {T}}\) threshold and by at most 4% above 40 \(\text {GeV }\). Their \(\eta \)-dependence is fairly smooth for 2015 and 2017–2018, with typical deviations of less than 4% (11%) outside (inside) the \(|\eta |>2.37\) region. These efficiency correction factors are measured with a typical precision of 0.1%.
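The lower panels of Fig. 17 show ratios of data to MC simulation efficiencies. A minimal sketch of such a ratio in one bin, assuming simple binomial efficiencies from tag-and-probe counts and uncorrelated data and MC uncertainties added in quadrature, is given below; the systematic uncertainties included in the actual measurement are not modelled, and all names are hypothetical.

```python
import math


def efficiency(k_pass, n_total):
    """Binomial efficiency and its statistical uncertainty."""
    eff = k_pass / n_total
    return eff, math.sqrt(eff * (1.0 - eff) / n_total)


def scale_factor(k_data, n_data, k_mc, n_mc):
    """Correction factor eff_data / eff_MC with a propagated statistical error."""
    e_d, s_d = efficiency(k_data, n_data)
    e_m, s_m = efficiency(k_mc, n_mc)
    sf = e_d / e_m
    # Relative uncertainties of data and MC combined in quadrature.
    s_sf = sf * math.sqrt((s_d / e_d) ** 2 + (s_m / e_m) ** 2)
    return sf, s_sf


def corrected_weight(event_weight, sf):
    """Apply the correction factor to a simulated event weight."""
    return event_weight * sf
```

In this picture, a factor "deviating from unity by 18%" near threshold means \(\epsilon_{\text{data}}/\epsilon_{\text{MC}} \approx 0.82\) (or 1.18) in that bin, and simulated events are reweighted accordingly.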

Figure 18 shows the dependence of the trigger efficiency on pile-up. This dependence was reduced towards the end of Run 2. The residual dependence is caused by the isolation requirements both in the HLT and at L1.

Evolution of the single-electron trigger combination efficiency as a function of the pile-up during Run 2, showing measurements in data only. The changes between years are detailed in Sect. 10.1. The efficiency is given with respect to offline tight identification and the FCTight isolation working point. Background subtraction is not applied, as the effect is expected to be negligible. Poorly populated bins are removed. Only offline candidates with \(E_{\text {T}}\) values at least 1 \(\text {GeV }\) above the corresponding trigger threshold are used. The error bars indicate statistical and systematic uncertainties combined in quadrature

Sources of inefficiency for the e26_lhtight_nod0_ivarloose and e60_lhmedium_nod0 triggers relative to the offline reconstruction and the corresponding L1 requirement (EM22VHI) are shown in Table 9. The sources are broken down by selection step in the HLT; the steps are described in Sect. 6.3. The offline reconstructed electron is required to have \(E_{\text {T}} >27\) (61) \(\text {GeV }\) and pass the ‘lhtight’ identification. Each inefficiency is the percentage of candidates that pass the offline identification but fail the online selection at the indicated step. It is measured with a tag-and-probe method using \(Z \rightarrow ee\) decays, which provides approximately \(25\,000\) and \(15\,000\) suitable probe electrons for the two triggers, respectively. The size of each step's contribution to the overall inefficiency depends on the \(E_{\text {T}}\) of the electron and on the tightness of the selection requirements. The dominant source of inefficiency for the lowest-threshold unprescaled isolated trigger is the electron identification, while for the lowest-threshold unprescaled non-isolated trigger the sources of inefficiency are more diverse. These inefficiencies are driven by differences between the online and offline reconstruction criteria described in Sect. 6.
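A step-by-step breakdown of this kind can be sketched by attributing each probe (already passing the offline selection and L1) to the first HLT step it fails, assuming the steps run in sequence so that a rejected candidate is not examined further. The step labels below are hypothetical placeholders for the steps of Sect. 6.3, and each probe is assumed to be a dictionary of per-step pass/fail flags.

```python
from collections import Counter

# Hypothetical labels for the sequential HLT steps (see Sect. 6.3).
STEPS = ("fast_calo", "fast_electron", "precision_calo", "precision_electron")


def first_failed_step(step_results, steps=STEPS):
    """Return the first step the probe fails, or None if it passes all steps."""
    for step in steps:
        if not step_results.get(step, False):
            return step
    return None


def inefficiency_breakdown(probes, steps=STEPS):
    """Percentage of probes lost at each step, relative to all probes."""
    n = len(probes)
    lost = Counter(first_failed_step(p, steps) for p in probes)
    return {step: 100.0 * lost[step] / n for step in steps}
```

With this attribution, the per-step percentages add up to the total inefficiency of the chain, which makes the relative importance of each step (for example, identification versus isolation) directly comparable.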

10.4 Dielectron triggers

Dielectron triggers allow electron \(E_{\text {T}}\) thresholds at least 9 \(\text {GeV }\) below those of the single-electron triggers, together with looser identification and isolation requirements, at the cost of only a very small increase in HLT rate. The major constraint for dielectron triggers comes from their L1 \(E_{\text {T}}\) thresholds, while the corresponding HLT rates, shown in Fig. 19, are of the order of 10 Hz, which allows triggers with a very loose HLT selection to be kept. In 2017, the L1_2EM15VH seed had to be replaced by L1_2EM20VH because of rate constraints, and an additional set of dielectron triggers seeded by L1_2EM15VHI was introduced.

Dependence of the dielectron trigger rates on the luminosity in 2015–2018. The changes between years are detailed in Sect. 10.1. Open markers represent L1 triggers with no EM isolation (L1 no-iso), while filled markers represent EM isolated L1 triggers (L1 iso)

The efficiencies of the dielectron triggers as a function of the offline electron \(E_{\text {T}}\), shown in Fig. 20a, are calculated for a single electron leg of the dielectron trigger. Thus, for the 2015 dielectron trigger 2e12_lhloose, the efficiency of e12_lhloose is shown. The dielectron trigger had a lower \(E_{\text {T}}\) threshold in 2015 and a slightly tighter identification working point (‘lhloose’ instead of ‘lhvloose’), which results in a different efficiency curve. The dielectron triggers with an \(E_{\text {T}}\) threshold of 17 \(\text {GeV }\) have a lower efficiency in 2017 and 2018 than in previous years for \(E_{\text {T}}\) below 60 \(\text {GeV }\). This is due to the L1 seed, which has an electromagnetic isolation requirement. To recover the lost efficiency, ATLAS physics analyses typically use a combination of a lower-\(E_{\text {T}}\) trigger (isolated at L1) and a higher-\(E_{\text {T}}\) trigger (with only the L1 hadronic veto applied). The \(\eta \)-dependence of the efficiencies of the dielectron trigger legs is shown in Fig. 20b. As for the single-electron triggers, the efficiency is lower in the \(1.37<|\eta |<1.52\) and \(|\eta |>2.37\) regions. Outside these regions, the efficiencies of dielectron triggers without L1 EM isolation are about 5% lower in the endcaps than in the barrel, while those of triggers with L1 EM isolation vary by at most 3%.
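Since the efficiencies are quoted per leg, an analysis needs to combine them into an event-level efficiency. A minimal sketch for a symmetric dielectron trigger, assuming the two legs fire independently and using a hypothetical binned per-leg efficiency in \(E_{\text {T}}\) only (the \(\eta \)-dependence is ignored for brevity), multiplies the two leg efficiencies.

```python
from bisect import bisect_right


def leg_efficiency(et, edges, effs):
    """Binned per-leg efficiency; returns 0 below the first bin edge.
    Values above the last edge use the last bin (assumed plateau)."""
    if et < edges[0]:
        return 0.0
    i = min(bisect_right(edges, et) - 1, len(effs) - 1)
    return effs[i]


def dielectron_event_efficiency(et1, et2, edges, effs):
    """Event efficiency of a symmetric dielectron trigger,
    assuming independent legs: eff(leg 1) * eff(leg 2)."""
    return leg_efficiency(et1, edges, effs) * leg_efficiency(et2, edges, effs)
```

The independence assumption is the simplest choice; combining a lower-threshold L1-isolated trigger with a higher-threshold non-isolated one, as described above, requires a more careful treatment of which leg passes which trigger.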

Evolution of efficiencies for dielectron trigger legs as a function of the offline electron (a) \(E_{\text {T}}\) and (b) \(\eta \) during Run 2, showing measurements in data only. The changes between years are detailed in Sect. 10.1. The efficiency is given with respect to the loose offline identification and the FCLoose isolation working point. For (b), only offline candidates with \(E_{\text {T}}\) values 1 \(\text {GeV }\) above the corresponding trigger threshold are used. The error bars indicate statistical and systematic uncertainties combined in quadrature.

Figure 21 shows the dielectron trigger efficiency as a function of pile-up. It decreases slightly with \(\langle \mu \rangle \) for non-isolated L1 triggers, and has a much stronger \(\langle \mu \rangle \) dependence (due to the L1 electromagnetic isolation requirement) for the isolated L1 triggers.